Introduction (Elo for Chess Engines)

The evaluation of chess engine improvements relies on robust statistical methodologies to measure subtle strength differences, typically quantified using the Elo rating system. Developed by Arpad Elo, this system calculates relative skill levels in zero-sum games by modelling expected performance probabilities between opponents. When a chess engine’s expected score against a reference opponent is known, its Elo difference (ΔElo) can be derived through a logarithmic relationship: ΔElo = 400 × log₁₀(expected_score / (1 – expected_score)). This mathematical foundation turns win/draw/loss outcomes into strength estimates, making it indispensable for evaluating algorithmic enhancements in engines like Wordfish [6][11].

The Sequential Probability Ratio Test (SPRT) revolutionises this evaluation process by stopping a test as soon as the evidence is strong enough. Unlike fixed-length tests, which play a predetermined number of games even when the outcome is clear early, SPRT continuously updates a likelihood ratio comparing two hypotheses: H₀ (the patch has negligible impact, e.g., ΔElo = 0) and H₁ (the patch reaches a threshold, e.g., ΔElo = 5). The test ends when this ratio crosses one of two bounds calculated from the tolerated error rates (alpha for false positives, beta for false negatives); with the common choice alpha = beta = 0.05, each error rate is held to roughly 5%. Stockfish’s distributed testing framework, Fishtest, uses SPRT for its patch tests because, on average, it reaches a decision with substantially fewer games than a fixed-length test with the same error rates [1][3].

For developers comparing versions like Wordfish Base (stable) against Wordfish Dev (experimental), SPRT offers methodological rigour. The test requires careful parameterisation of Elo bounds (elo0, elo1), error tolerances (alpha, beta), and auxiliary components like opening books and time controls. A well-designed SPRT setup reliably detects improvements and regressions; the effect size itself is estimated separately from the final win/draw/loss counts and typically carries wide error bars. This tutorial explores these considerations systematically, establishing why SPRT has become the gold standard in computer chess despite its mathematical complexity [1][9].

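- Statistical Efficiency: SPRT bounds both the Type I (false positive) and Type II (false negative) error rates at the chosen alpha and beta and, in the idealised setting, needs the fewest games on average among all tests with those error rates (Wald–Wolfowitz optimality). A fixed-length test must have its game count chosen in advance to give the same guarantees.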

- Resource Optimisation: Tests terminate early for clear improvements/regressions, saving CPU years in large-scale projects like Stockfish’s distributed testing framework.

- Practical Implementation: Open-source tools like Cutechess-cli and Fast-chess implement SPRT natively, lowering adoption barriers through standardised commands.

Understanding the Elo System in Chess

Mathematical Foundations

The Elo system quantifies relative player strength through a probabilistic model where expected scores derive from rating differentials. The core formula gives the expected score E_A of player A against player B (a win counts 1, a draw 0.5):

E_A = 1 / (1 + 10^((R_B – R_A)/400))

Here, R_A and R_B denote the players’ ratings, and the constant 400 scales ratings so that a 200-point gap predicts an expected score of about 76% for the stronger player. Elo’s original model assumed that each player’s performance in a game is normally distributed with a standard deviation of 200 points; the logistic formula above closely approximates that model, and empirical evidence suggests the logistic distribution fits chess data better [6][11].
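To check these numbers, here is a minimal Python sketch of both models mentioned above: the logistic formula and Elo’s original normal model with a per-player standard deviation of 200 points (so the difference of two performances has standard deviation 200·√2). The function names are illustrative, not taken from any library.

```python
import math


def expected_score_logistic(r_a: float, r_b: float) -> float:
    """Expected score of A against B under the logistic Elo formula."""
    return 1.0 / (1.0 + 10.0 ** ((r_b - r_a) / 400.0))


def expected_score_normal(r_a: float, r_b: float, sigma: float = 200.0) -> float:
    """Expected score of A under Elo's original normal model.

    Each player's single-game performance is modelled as N(rating, sigma^2),
    so the performance difference has standard deviation sigma * sqrt(2).
    """
    z = (r_a - r_b) / (sigma * math.sqrt(2.0))
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


if __name__ == "__main__":
    for gap in (0, 100, 200, 300):
        print(f"gap {gap:>3}: logistic {expected_score_logistic(gap, 0):.3f}, "
              f"normal {expected_score_normal(gap, 0):.3f}")
```

Both models put a 200-point gap at roughly 76%; they drift apart slowly as the gap grows.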

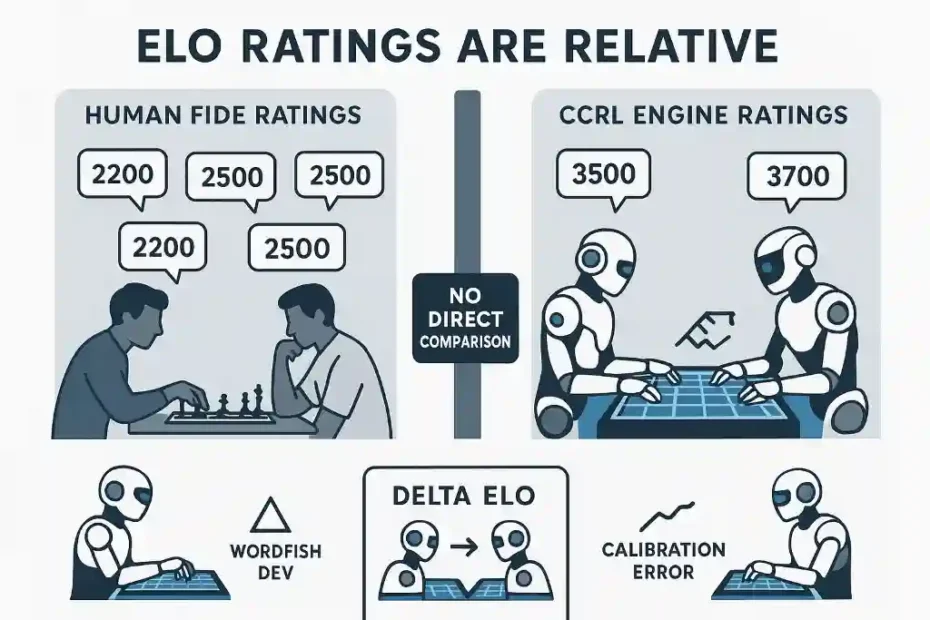

Crucially, Elo ratings are relative measurements confined to specific player pools. Engine ratings like those on CCRL (Computer Chess Rating Lists) exist on separate scales from human FIDE ratings because the opponent pools differ. This relativity is why engine changes are usually evaluated in self-play: matching Wordfish Dev directly against Wordfish Base isolates the impact of the modification. Comparing ratings taken from different pools (e.g., a Wordfish rating from one list against a Stockfish rating from another) mixes incompatible scales and makes the resulting ΔElo unreliable [6][7].

Performance Calculation

Rating systems apply post-game updates scaled by a K-factor, which controls volatility (K = 32 is a common choice in some rating pools). SPRT analyses of engine tests skip these incremental updates altogether and compute ΔElo directly from the match score. For a match yielding W wins, L losses, and D draws, the score fraction (S) is:

S = (W + 0.5×D) / (W + L + D)

ΔElo is then estimated as:

ΔElo = 400 × log₁₀(S / (1 – S))

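For example, a 55% score translates to ΔElo ≈ 400 × log₁₀(0.55/0.45) ≈ 34.9 points. The estimate becomes unreliable at extreme scores (above roughly 80%): the logarithm diverges as S approaches 1, so a handful of games can swing ΔElo dramatically, and the logistic model fits lopsided pairings less well. Patch testing rarely approaches that regime because the differences being tested are small, which is why SPRT bounds such as elo1 are typically set between 2 and 10 Elo [6][11].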
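The two formulas above translate directly into code. This is a minimal sketch; the function names are illustrative, and the W, L, D argument order simply follows the score formula.

```python
import math


def score_fraction(wins: int, losses: int, draws: int) -> float:
    """S = (W + 0.5*D) / (W + L + D), a value between 0 and 1."""
    games = wins + losses + draws
    if games == 0:
        raise ValueError("no games played")
    return (wins + 0.5 * draws) / games


def elo_from_score(s: float) -> float:
    """Logistic Elo difference implied by a score fraction."""
    if not 0.0 < s < 1.0:
        # The logarithm diverges at 0% and 100%, so no finite estimate exists.
        raise ValueError("score must be strictly between 0 and 1")
    return 400.0 * math.log10(s / (1.0 - s))


if __name__ == "__main__":
    print(f"{elo_from_score(0.55):.1f}")                            # 34.9
    print(f"{elo_from_score(score_fraction(520, 450, 1030)):.1f}")  # 12.2
```

The second line reproduces the 520-win, 450-loss, 1030-draw example worked through later in this tutorial. Evaluating elo_from_score at 0.80 and 0.90 (about 241 and 382) shows how quickly the estimate grows near the extremes.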

Table: Expected Scores for Rating Differences

| Rating Gap (Elo) | Expected Score of Stronger Side (%) |
|---|---|
| 0 | 50.0 |
| 100 | 64.0 |
| 200 | 76.0 |
| 300 | 84.9 |

Fundamentals of SPRT (Sequential Probability Ratio Test)

Hypothesis Formulation

SPRT evaluates two mutually exclusive hypotheses:

- Null Hypothesis (H₀): The new version’s strength equals the base version’s strength plus a negligible margin (ΔElo = elo0, e.g., 0).

- Alternative Hypothesis (H₁): The new version exceeds the base version by a meaningful threshold (ΔElo = elo1, e.g., 5).

Developers specify elo0 and elo1 based on practical significance. Stockfish’s Fishtest, for example, uses narrow intervals such as [0, 2] at short time control (STC) and [0.5, 2.5] at long time control (LTC), expressed on its normalized Elo scale; weaker engines, whose improvements tend to be larger, commonly use wider intervals such as [0, 5]. The interval [elo0, elo1] defines an “indifference region”: if the true ΔElo lies inside it, either decision is acceptable. The test continues as long as the evidence does not clearly favour one bound over the other [1][3].

Likelihood Ratios and Stopping Rules

After each game, SPRT updates the log-likelihood ratio (LLR):

LLR = ln(P(results | H₁) / P(results | H₀))

Results are evaluated via trinomial distributions (win/draw/loss), with probabilities derived from Elo models. The LLR accumulates until crossing one of two thresholds:

- Upper bound: accept H₁ if LLR ≥ ln((1 – β)/α)

- Lower bound: accept H₀ if LLR ≤ ln(β/(1 – α))

Standard α = β = 0.05 settings give bounds of ±ln(19) ≈ ±2.94 and keep the false positive and false negative rates at roughly 5% each. Crucially, the number of games needed depends on the width of [elo0, elo1]: narrower intervals (e.g., [0, 2]) require many more games than wider ones (e.g., [0, 10]) but can resolve smaller differences. This trade-off makes parameter selection critical: wide bounds accelerate tests but risk rejecting genuine small improvements [1][3].
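A minimal sketch of the stopping rule follows. The bounds are Wald’s formulas exactly as stated above; the LLR itself uses a common normal approximation (the mean per-game score treated as Gaussian with the empirical win/draw/loss variance) rather than the exact trinomial likelihood, so real tools, especially those using pentanomial statistics, may compute slightly different values. All function names are illustrative.

```python
import math


def wald_bounds(alpha: float, beta: float) -> tuple[float, float]:
    """Wald's thresholds (lower, upper) on the log-likelihood ratio."""
    lower = math.log(beta / (1.0 - alpha))  # LLR <= lower: accept H0
    upper = math.log((1.0 - beta) / alpha)  # LLR >= upper: accept H1
    return lower, upper


def expected_score(elo: float) -> float:
    """Logistic expected score for an Elo difference."""
    return 1.0 / (1.0 + 10.0 ** (-elo / 400.0))


def llr_normal_approx(wins: int, losses: int, draws: int,
                      elo0: float, elo1: float) -> float:
    """Approximate LLR of H1 (elo1) against H0 (elo0) from W/L/D counts.

    The mean per-game score is treated as normally distributed with the
    empirical trinomial variance; this approximates, but is not, the exact
    trinomial likelihood ratio.
    """
    n = wins + losses + draws
    if n == 0:
        return 0.0
    w, d = wins / n, draws / n
    mean = w + 0.5 * d
    var = (w + 0.25 * d) - mean ** 2  # variance of one game's score
    if var <= 0.0:
        return 0.0  # degenerate sample, e.g. only draws so far
    s0, s1 = expected_score(elo0), expected_score(elo1)
    return n * (s1 - s0) * (2.0 * mean - s0 - s1) / (2.0 * var)


def sprt_decision(llr: float, alpha: float = 0.05, beta: float = 0.05) -> str:
    """Map an LLR value to 'H0', 'H1' or 'continue'."""
    lower, upper = wald_bounds(alpha, beta)
    if llr >= upper:
        return "H1"
    if llr <= lower:
        return "H0"
    return "continue"
```

Because the expected LLR gain per game scales roughly with the square of s1 – s0 when the true strength sits at one of the bounds, halving the width of [elo0, elo1] roughly quadruples the number of games a decision needs.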

Setting Up an SPRT Test for Chess Engines

Parameter Selection

Table: Recommended SPRT Parameters by Engine Strength

| Engine Strength | Gainer Bounds [elo0, elo1] | Non-regression Bounds [elo0, elo1] |
|---|---|---|
| Top 30 engines | [0, 3] | [-3, 1] |
| Top 200 engines | [0, 5] | [-5, 0] |
| Weaker engines | [0, 10] | [-10, 0] |

- Time Controls: Use “tc=10+0.1” (10 seconds plus a 0.1 s increment per move) for STC or “tc=60+0.6” for LTC. The increment reduces losses on time.

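- Opening Books: Balanced books (e.g., “8moves_v3.pgn”) start from roughly equal positions, which at engine level tends to produce many draws; unbalanced books (e.g., “UHO_Lichess.epd”) start from positions where one side is better, raising the share of decisive games. Play each unbalanced opening with both colours so the bias cancels.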

- Concurrency: Match “concurrency” to physical cores (not threads) to avoid context-switching overhead [1][3].

Fast-chess Implementation Example

```shell
fast-chess -engine cmd=wordfish_dev name=dev -engine cmd=wordfish_base name=base \
  -each tc=10+0.1 -rounds 20000 -repeat -concurrency 8 \
  -openings file=UHO_Lichess.epd format=epd \
  -sprt elo0=0 elo1=5 alpha=0.05 beta=0.05
```

This tests Wordfish Dev against Base with 8 concurrent games, a 10s+0.1s time control, and UHO openings; -repeat plays each opening twice with colours reversed, and -rounds only caps the maximum test length. SPRT stops as soon as the evidence favours ΔElo = 5 over ΔElo = 0 (accept H₁) or ΔElo = 0 over ΔElo = 5 (accept H₀).

Avoiding Common Pitfalls

- Draw Rates: >60% draws reduce sensitivity; use unbalanced books or contempt settings.

- Hardware Variance: Run tests on homogeneous machines; cloud instances introduce unpredictable throttling.

- Version Control: Build both engines with the same compiler and flags, from commits that differ only by the patch under test [1][7].

Calculating Elo Differences in SPRT Tests

Interpreting LLR Trajectories

SPRT itself is a decision procedure rather than an estimator: its running output is the LLR, which testing tools report as the test progresses (usually alongside an Elo estimate). Developers read its trend:

- Positive LLR trend: the results fit H₁ (ΔElo = elo1) better than H₀.

- Negative LLR trend: the results fit H₀ (ΔElo = elo0) better than H₁.

For a ΔElo point estimate after the test, calculate the score (S) from the final W/D/L counts:

ΔElo = 400 × log₁₀(S / (1 – S))

For example, 520 wins, 450 losses, and 1030 draws yield:

S = (520 + 0.5×1030) / (520 + 450 + 1030) = 1035 / 2000 = 0.5175

ΔElo = 400 × log₁₀(0.5175 / 0.4825) ≈ 12.2

Confidence Intervals

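Since ΔElo is estimated empirically, compute a 95% confidence interval using the delta method:

CI = ± z × (σ / √N) × 400 / (ln(10) × S × (1 – S))

Where σ² = (W + D/4)/N – S² is the variance of a single game’s score (draws make it smaller than S × (1 – S)), z = 1.96 for a 95% CI, and N = total games. For N = 2000 and S = 0.5175, with 400 / ln(10) ≈ 173.7:

σ² = (520 + 1030/4)/2000 – 0.5175² ≈ 0.1209, so σ ≈ 0.3478

CI ≈ ± 1.96 × (0.3478 / √2000) × 173.7 / (0.5175 × 0.4825) ≈ ±10.6

Thus, ΔElo ≈ 12.2 ± 10.6 (roughly 1.6 to 22.8), confirming low precision with limited games. SPRT’s efficiency lies in terminating before a precise ΔElo is known, focusing instead on exceeding practical thresholds [6][11].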
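The interval calculation can be sketched in a few lines of Python. This is a normal-approximation sketch under stated assumptions: it uses the per-game win/draw/loss variance (so draws shrink the interval) and the delta method, and it treats games as independent, ignoring any pairing of games by opening. The function name is illustrative.

```python
import math


def elo_with_ci(wins: int, losses: int, draws: int, z: float = 1.96):
    """Elo point estimate and symmetric CI half-width from W/L/D counts.

    Uses the trinomial per-game variance (draws shrink it) and the delta
    method, dElo/dS = 400 / (ln(10) * S * (1 - S)).
    """
    n = wins + losses + draws
    if n == 0:
        raise ValueError("no games played")
    w, d = wins / n, draws / n
    s = w + 0.5 * d
    if not 0.0 < s < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    var = (w + 0.25 * d) - s ** 2         # variance of a single game's score
    se_score = math.sqrt(var / n)         # standard error of S
    slope = 400.0 / (math.log(10.0) * s * (1.0 - s))
    elo = 400.0 * math.log10(s / (1.0 - s))
    return elo, z * se_score * slope


if __name__ == "__main__":
    elo, half = elo_with_ci(520, 450, 1030)
    print(f"{elo:.1f} +/- {half:.1f}")  # 12.2 +/- 10.6
```

Quadrupling the number of games with the same proportions halves the half-width, which is why precise ΔElo estimates are so expensive compared with an SPRT decision.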

Practical Example: Wordfish Base vs Wordfish Dev

Test Configuration

Suppose we test an NNUE update using:

- SPRT: elo0=0, elo1=5, α=β=0.05

- Hardware: 16-core AMD EPYC, 3.5GHz

- Time Control: 60s+0.6s (LTC)

- Openings: “Pohl.epd” (high-variance positions)

- Engines: Wordfish Base (commit a1b2c3), Wordfish Dev (commit d4e5f6 + NNUE patch)

After 15,000 games, SPRT terminates with LLR = 3.01, crossing the upper bound ln(0.95/0.05) ≈ 2.94 and accepting H₁ (the results favour ΔElo = 5 over ΔElo = 0). Final scores:

- Wins: 3150 (dev), 2850 (base)

- Draws: 9000

- S = (3150 + 0.5×9000)/15000 = 7650/15000 = 0.51

- ΔElo = 400 × log₁₀(0.51/0.49) ≈ 6.9

Analysing Output Logs

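Fast-chess prints periodic updates; schematically (the exact layout depends on the tool and version), the LLR in this example evolves as:

```
Game 5000:  LLR = -0.23 [-2.94, 2.94]
Game 10000: LLR = 1.87 [-2.94, 2.94]
Game 15000: LLR = 3.01 - H1 accepted
```

The steady LLR climb indicates accumulating evidence for H₁. Visualising LLR trajectories helps diagnose flaky patches: some wandering is normal, since the LLR behaves like a random walk, but erratic swings can point to opening bias or time management bugs [3][9].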
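To get a feel for how LLR trajectories behave, the sketch below simulates an SPRT run with made-up per-game probabilities for Dev. It uses a common normal approximation to the trinomial LLR (defined here so the block runs on its own) and Wald’s bounds; the probabilities, seed and reporting interval are arbitrary assumptions, not values from the Wordfish test.

```python
import math
import random


def expected_score(elo: float) -> float:
    return 1.0 / (1.0 + 10.0 ** (-elo / 400.0))


def llr(wins: int, losses: int, draws: int, elo0: float, elo1: float) -> float:
    """Normal approximation to the trinomial log-likelihood ratio."""
    n = wins + losses + draws
    if n == 0:
        return 0.0
    w, d = wins / n, draws / n
    mean = w + 0.5 * d
    var = (w + 0.25 * d) - mean ** 2
    if var <= 0.0:
        return 0.0
    s0, s1 = expected_score(elo0), expected_score(elo1)
    return n * (s1 - s0) * (2.0 * mean - s0 - s1) / (2.0 * var)


def simulate_sprt(p_win: float, p_draw: float, elo0: float = 0.0,
                  elo1: float = 5.0, alpha: float = 0.05, beta: float = 0.05,
                  max_games: int = 200_000, report_every: int = 500,
                  seed: int = 1):
    """Simulate games for Dev until the LLR leaves (lower, upper).

    p_win and p_draw are hypothetical per-game probabilities for Dev;
    the loss probability is 1 - p_win - p_draw. Returns the decision,
    the number of games played and periodic (games, LLR) snapshots.
    """
    rng = random.Random(seed)
    lower = math.log(beta / (1.0 - alpha))
    upper = math.log((1.0 - beta) / alpha)
    wins = losses = draws = 0
    trajectory = []
    for game in range(1, max_games + 1):
        u = rng.random()
        if u < p_win:
            wins += 1
        elif u < p_win + p_draw:
            draws += 1
        else:
            losses += 1
        value = llr(wins, losses, draws, elo0, elo1)
        if game % report_every == 0:
            trajectory.append((game, round(value, 2)))
        if value >= upper:
            return "H1", game, trajectory
        if value <= lower:
            return "H0", game, trajectory
    return "inconclusive", max_games, trajectory


if __name__ == "__main__":
    decision, games, snapshots = simulate_sprt(p_win=0.30, p_draw=0.45)
    for g, value in snapshots:
        print(f"Game {g}: LLR = {value}")
    print(decision, "after", games, "games")
```

Rerunning with different seeds, or with p_win close to 1 – p_win – p_draw, shows how much an LLR path can wander before it settles, which helps calibrate what counts as an “erratic” trajectory.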

Tools and Best Practices

Software Ecosystem

- Cutechess-cli: Long-established command-line match runner with built-in SPRT; supports PGN and EPD opening files.

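- Fast-chess: Modern replacement featuring pentanomial statistics (scoring game pairs played from the same opening with colours reversed, which gives more accurate error estimates, especially with unbalanced books) and JSON outputs.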

- OpenBench: Distributed testing framework automating SPRT across volunteer hardware.

- pySPRT: Python library for simulating test durations pre-deployment [1][9].
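The pentanomial idea can be sketched briefly: instead of treating every game as independent, each pair of games from the same opening (colours reversed) counts as one sample, with Dev’s pair score being 0, 0.5, 1, 1.5 or 2. The function below is a hypothetical illustration of that idea with a normal-approximation interval, not fast-chess’s actual code.

```python
import math
from collections import Counter
from typing import Iterable


def pentanomial_elo(pair_scores: Iterable[float], z: float = 1.96):
    """Elo estimate and CI half-width from per-pair scores.

    Each entry is Dev's total over one game pair played from the same
    opening with colours reversed: 0, 0.5, 1, 1.5 or 2.
    """
    counts = Counter(pair_scores)
    n_pairs = sum(counts.values())
    if n_pairs == 0:
        raise ValueError("no game pairs")
    # Work with the per-game score of each pair (pair total / 2).
    mean = sum((k / 2) * c for k, c in counts.items()) / n_pairs
    if not 0.0 < mean < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    var = sum(((k / 2) - mean) ** 2 * c for k, c in counts.items()) / n_pairs
    se = math.sqrt(var / n_pairs)  # standard error of the per-game mean
    slope = 400.0 / (math.log(10.0) * mean * (1.0 - mean))
    elo = 400.0 * math.log10(mean / (1.0 - mean))
    return elo, z * se * slope


if __name__ == "__main__":
    # Hypothetical results: with an unbalanced book, "one win each" pairs
    # (pair score 1) are common, which the pentanomial model captures.
    pairs = [1.0] * 60 + [1.5] * 20 + [0.5] * 12 + [2.0] * 5 + [0.0] * 3
    elo, half = pentanomial_elo(pairs)
    print(f"{elo:.1f} +/- {half:.1f}")
```

When the two games of a pair are negatively correlated, as with unbalanced openings where each engine tends to win with the favoured side, the pair variance is small and the interval is narrower than one that treats the two games as independent.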

Optimisation Strategies

- Opening Suites: Use 8-12 move unbalanced positions to reduce draw rates below 50%.

- Concurrency Scaling: On a machine with N hardware threads and two-way hyperthreading, run N/2 games simultaneously (one per physical core) to avoid hyperthreading penalties.

- Batch Sizes: Process games in blocks of 1000 to minimise disk I/O overhead.

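- Early Termination: Configure the match runner to adjudicate games, e.g., scoring a loss once an engine reports –600 cp (–6.00 pawns) or worse for several consecutive moves, and a draw when both engines report near-zero scores for many moves late in the game [1][3].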
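Match runners such as cutechess-cli expose their own resign and draw adjudication settings; the function below is only a hypothetical illustration of the kind of rule they apply. Every threshold in it (600 cp over 3 moves for resignation; move 40, 10 cp and 8 moves for draws) is an assumed example value, and the evaluations are assumed to be already converted to White’s point of view.

```python
from typing import Optional, Sequence


def adjudicate(evals_cp: Sequence[int], move_number: int,
               resign_score: int = 600, resign_count: int = 3,
               draw_move: int = 40, draw_score: int = 10,
               draw_count: int = 8) -> Optional[str]:
    """Hypothetical adjudication rule; all thresholds are example values.

    evals_cp: evaluations reported after each move so far, in centipawns,
    all converted to White's point of view.
    Returns "1-0", "0-1", "1/2-1/2", or None to keep playing.
    """
    recent = list(evals_cp[-resign_count:])
    if len(recent) == resign_count:
        if all(e >= resign_score for e in recent):
            return "1-0"   # Black's position judged lost
        if all(e <= -resign_score for e in recent):
            return "0-1"   # White's position judged lost
    recent = list(evals_cp[-draw_count:])
    if (move_number >= draw_move and len(recent) == draw_count
            and all(abs(e) <= draw_score for e in recent)):
        return "1/2-1/2"   # long, level phase late in the game
    return None
```

Requiring several consecutive evaluations, rather than a single one, guards against adjudicating on a momentary evaluation spike.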

Conclusion: The Art and Science of Efficient Engine Testing

SPRT has revolutionised chess engine development by enabling statistically rigorous evaluations with minimised computational costs. For projects like Wordfish, adopting SPRT can mean distinguishing 2-5 Elo improvements within days instead of weeks, accelerating innovation while preventing regressions. This efficiency stems from dynamic termination, which focuses resources on ambiguous patches while quickly accepting or rejecting obvious changes.

However, SPRT’s sensitivity to parameters demands thoughtful calibration. Bounds like [elo0, elo1] must reflect practical significance: a small elo1 such as 2 lets a strong engine detect the small gains typical at its level, whereas elo1 = 5 suffices for weaker engines, whose improvements tend to be larger. Similarly, time controls matter because some changes behave differently at greater search depth; Stockfish, for example, confirms patches that pass at STC with a second test at LTC. Developers should also monitor real-time LLR plots to detect anomalies early, like score plateaus indicating book bias or hardware stalls [1][3].

Looking forward, SPRT methodologies continue evolving. Distributed testing frameworks like OpenBench enable thousand-core tests, shrinking decision cycles to hours. Meanwhile, alternatives like Bayesian Elo estimation offer complementary approaches for post-hoc analysis. Yet SPRT remains the standard for live testing, balancing statistical power with ruthless efficiency: a testament to its mathematical elegance and practical utility in the compute-intensive world of engine development [1][9].

References

- [1] SPRT in Chess Programming (Chessprogramming Wiki)

- [3] Rustic Chess Engine Testing Guide

- [6] Elo Rating System (Wikipedia)

- [7] Talkchess Discussion on Elo Estimation

- [9] Engine Testing Framework (Chessprogramming Wiki)

- [11] Elo Calculator (Omni Calculator)

Jorge Ruiz

connoisseur of both chess and anthropology, a combination that reflects his deep intellectual curiosity and passion for understanding both the art of strategic