Revolution Dev vs. Base: Understanding the Regression, Why SPRT Didn’t Decide, and How We Turn It Around

Executive summary

Across multiple Fastchess runs you shared, at both blitz-ish (10+0.1) and longer (60+0.1) controls, the development branch of the Revolution UCI engine tends to trail the base (mainline) Revolution by roughly 20 to 30 Elo. In statistical terms, most longer matches produce confidence intervals that exclude 0, whereas individual short runs can still swing widely. The Sequential Probability Ratio Test (SPRT) you configured with H0 = 0 Elo and H1 = +10 Elo has not accepted either hypothesis in several runs because the log-likelihood ratio (LLR) never crossed ±2.94. That is a classic “keep testing” outcome rather than a contradiction. Your ordo summary (a 1,000-game head-to-head) also puts base Revolution on top (52.8% vs 47.2%), consistent with a gap of about 20 Elo in favour of base.

This article explains:

- What your test bed looks like (time controls, book, SPRT, and scheduler) and why those details matter.

- How to interpret Elo, LOS, LLR, and confidence intervals when results seem inconsistent across runs.

- Where the regression could be coming from (search, time-management, evaluation, compile flags, or workload noise).

- Actionable strategies—from ablation to parameter tuning and environment control—to turn the dev branch back into a winner, plus testing tactics to surface its strengths ethically (not by cherry-picking).

1) Your current testbed at a glance

1.1 Engines, book and time controls

Your Fastchess JSON config sets two engines, revolution (base) and revolution_060925_v1.20, with Threads=1 and Hash=32, using the UHO_2024_8mvs_+085_+094.pgn opening set. Time control is represented as a “limit.tc” triplet; for the 60+0.1 test you used time=60000 and increment=100 (both in milliseconds), i.e., 60 s plus 0.1 s per move. The PGN path and event metadata are also stored in the JSON.

A few noteworthy details:

- Book: C:\fastchess_1\books\UHO_2024_8mvs_+085_+094.pgn. UHO (Unbalanced Human Openings) positions are deliberately unbalanced; the file name indicates White starts roughly +0.85 to +0.94 pawns ahead. Played in colour-reversed pairs, that bias cancels out while the draw rate drops, which makes the set a good choice for measuring small Elo differences.

- Concurrency: "concurrency": 2. You’re running two games simultaneously, each engine on 1 thread. On dual-socket systems, or when the OS migrates processes, this can introduce latency jitter that affects time management or NPS. We come back to this in the “environment control” section below.

- Seed: a fixed "seed" value is present, which is good for reproducibility.

1.2 SPRT settings (why H1/H0 weren’t validated)

You configured SPRT with:

- alpha = 0.05, beta = 0.05 (5% error rates)

- elo0 = 0.0, elo1 = 10.0

- model = "normalized"

- enabled = true

Interpretation: you’re testing whether the first engine in a pairing (engine A) is at least +10 Elo stronger than its opponent. If the LLR crosses +2.94, accept H1 (A is plausibly +10 or better); if it crosses −2.94, accept H0 (A’s edge is plausibly 0 or worse). If it stays in between, the test continues, which is the “no validation yet” you observed. An LLR hovering between −1.2 and +2.4, as in your examples, simply means the evidence is not yet strong enough to accept either hypothesis at those error rates. One subtlety: with model = "normalized", elo0 and elo1 are expressed in normalized Elo, a draw-rate-adjusted scale, so H1 = +10 is not numerically the same thing as +10 on the logistic “Elo ± error” line.
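The ±2.94 thresholds follow directly from Wald’s SPRT bounds for α = β = 0.05. A minimal Python sketch that reproduces them (the helper name is ours, not part of Fastchess):

```python
import math


def sprt_bounds(alpha: float, beta: float) -> tuple[float, float]:
    """Wald's SPRT decision bounds on the log-likelihood ratio (LLR).

    LLR <= lower -> accept H0; LLR >= upper -> accept H1; otherwise keep testing.
    """
    lower = math.log(beta / (1.0 - alpha))
    upper = math.log((1.0 - beta) / alpha)
    return lower, upper


lower, upper = sprt_bounds(alpha=0.05, beta=0.05)
print(f"accept H0 at LLR <= {lower:.2f}, accept H1 at LLR >= {upper:.2f}")
# accept H0 at LLR <= -2.94, accept H1 at LLR >= 2.94
```

Tightening both error rates to 0.01 would widen the bounds to about ±4.6, so every decision would take more games.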

1.3 What your aggregate ratings say

Your ordo file shows two players:

# PLAYER                   :  RATING  POINTS  PLAYED   (%)
1 revolution               :  3700.0   528.0    1000    53
2 revolution_060925_v1.20  :  3680.4   472.0    1000    47

White advantage = 0.00
Draw rate (equal opponents) = 50.00 %

This corresponds to ~+20 Elo in favour of base Revolution and 52.8% score vs 47.2%, matching your longer runs at 10+0.1 and 60+0.1 where base also leads.
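The conversion behind that statement is the standard logistic Elo formula. A small Python sketch, assuming the usual 400-point logistic scale; ordo fits its own ratings, so its 19.6-point gap differs slightly from the direct conversion:

```python
import math


def elo_from_score(score: float) -> float:
    """Logistic Elo difference implied by an average score strictly between 0 and 1."""
    if not 0.0 < score < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    return -400.0 * math.log10(1.0 / score - 1.0)


def score_from_elo(elo: float) -> float:
    """Inverse mapping: expected score for a given Elo difference."""
    return 1.0 / (1.0 + 10.0 ** (-elo / 400.0))


print(round(elo_from_score(528.0 / 1000.0), 1))  # 19.5  (base's 528/1000 points)
print(round(3700.0 - 3680.4, 1))  # 19.6  (ordo's rating gap)
```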

1.4 Game-pair structure and draw behaviour

Your game-pair summary shows 1,689 pairs in total, with 43.04% of all pairs counted as drawn: 35.41% are “1:1 drawn pairs” and 7.64% are “2-draws” pairs (both games drawn). That’s a healthy mix: enough decisive games to measure Elo, while the draw rate isn’t so high that we drown in noise.
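How a summary buckets pairs depends on the tool, so it can help to recompute the split yourself. The Python sketch below assumes an input format of one (game 1, game 2) tuple per colour-reversed pair, scored from engine A’s point of view. It counts pairs by number of drawn games and also builds the five-bucket pair-score histogram that pentanomial statistics rest on:

```python
from collections import Counter


def pair_breakdown(pairs):
    """Summarise colour-reversed game pairs.

    pairs: iterable of (result_game1, result_game2) for engine A,
           each result being 1.0 (win), 0.5 (draw) or 0.0 (loss).
    Returns (percent of pairs by number of draws, counts by pair score).
    """
    pairs = list(pairs)
    by_draws = Counter(sum(1 for r in pair if r == 0.5) for pair in pairs)
    draw_pct = {k: 100.0 * by_draws.get(k, 0) / len(pairs) for k in (0, 1, 2)}
    # Pentanomial buckets: total pair score of 0, 0.5, 1, 1.5 or 2 points.
    by_score = Counter(pair[0] + pair[1] for pair in pairs)
    pentanomial = [by_score.get(s, 0) for s in (0.0, 0.5, 1.0, 1.5, 2.0)]
    return draw_pct, pentanomial


# Hypothetical pairs, for illustration only.
draw_pct, ptnml = pair_breakdown([(1.0, 0.5), (0.5, 0.5), (1.0, 0.0), (0.0, 0.5)])
print(draw_pct)  # {0: 25.0, 1: 50.0, 2: 25.0}
print(ptnml)     # [0, 1, 2, 1, 0]
```

Note that the 1-point bucket mixes win/loss pairs with double draws; which of these a given summary calls “drawn” is worth checking against its documentation.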

2) What the per-run numbers really mean (and why they sometimes “disagree”)

You shared multiple match snippets, e.g.:

- 10+0.1, 1t, 32MB: Revolution vs revolution_dev_010925_v1.0.1 at N=2000 → +21.57 ± 8.28 Elo, LLR=1.57 (didn’t cross +2.94).

- 10+0.1, 1t, 32MB: Revolution vs revolution_dev_010925_v1.0.1 at N=1220 → +28.83 ± 10.82 Elo, LLR=1.25.

- 60+0.1, 1t, 32MB: Revolution vs revolution_060925_v1.20 at N=840 → +20.71 ± 12.29 Elo, LLR=2.38 (close to +2.94).

- Shorter, noisy runs (e.g., N=132, N=270, N=302) swing widely; some show slight dev edges, most show base edges, but confidence intervals are wide enough to overlap.

Two key takeaways:

- Confidence intervals govern the story, not single-number Elo estimates. A result like +21.6 ± 8.3 means “we’re ~95% confident the true edge lies between +13.3 and +29.9.” That excludes 0, which is strong evidence the base is better in that setup. In shorter runs, however, you might see −3.9 ± 23.8, whose interval spans −27.7 to +19.9; it’s compatible with either side being better, hence the LLR sits near zero and SPRT keeps going.

- SPRT is conservative by design. You “feel” you already know the answer in many runs (base > dev), but the LLR has to grow enough to pass the chosen thresholds at your error rates. With H0 = 0 and H1 = +10, the LLR drifts upward roughly in proportion to how far the true edge sits above the midpoint of the two hypotheses (about +5 Elo), drifts downward below it, and barely moves when the true edge is near +5. Your base > dev edges (~+20 to +30) should eventually push the LLR over +2.94 when you test with the base as engine A and H1 framed in that direction (see Section 6).
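To see where numbers like +21.6 ± 8.3 come from, here is a simplified Python sketch that turns win/draw/loss counts into an Elo estimate with a 95% interval. It uses a per-game (trinomial) normal approximation; Fastchess’s own error bars are based on game-pair statistics and can come out tighter with unbalanced books such as UHO, so treat this as an approximation, not a reimplementation. The counts in the example are hypothetical, chosen to give an edge close to +21.6:

```python
import math


def elo_from_score(score: float) -> float:
    return -400.0 * math.log10(1.0 / score - 1.0)


def elo_with_ci(wins: int, draws: int, losses: int, z: float = 1.96):
    """Elo estimate and approximate confidence interval from W/D/L counts."""
    n = wins + draws + losses
    score = (wins + 0.5 * draws) / n
    # Per-game variance of the score under a trinomial model.
    var = (wins * (1.0 - score) ** 2
           + draws * (0.5 - score) ** 2
           + losses * score ** 2) / n
    stderr = math.sqrt(var / n)
    # Build the interval on the score scale, then map both ends to Elo.
    low = elo_from_score(score - z * stderr)
    high = elo_from_score(score + z * stderr)
    return elo_from_score(score), low, high


elo, low, high = elo_with_ci(wins=562, draws=1000, losses=438)  # hypothetical counts
print(f"{elo:+.1f} Elo, 95% CI [{low:+.1f}, {high:+.1f}]")
```

The interval is slightly asymmetric on the Elo scale because the score-to-Elo curve is not linear.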

3) Why the dev branch is (currently) behind

There are five broad buckets where regressions typically come from. Your logs and configuration point to several plausible suspects:

3.1 Search and pruning thresholds

Small modifications in LMR, LMP, null-move, or aspiration windows can cost double-digit Elo if they push search toward over-pruning or unstable horizons. These effects are time-control sensitive—it’s common to see a patch help in bullet but hurt in longer controls, or vice versa. Your results show the same sign at 10+0.1 and 60+0.1 (base > dev), suggesting a general-purpose regression rather than a TC-specific one.

3.2 Time management and move-overhead

When an engine misallocates time, it leaks Elo through shallow decisions in critical nodes or flagging in sharp lines. If dev altered iteration falloff, move-overhead, or extension cutoffs, the midgame could be under-searched. Because your config runs two concurrent games ("concurrency": 2) without CPU pinning ("affinity": false), occasional scheduling jitter can amplify time-use instability—especially on a dual-socket system. Pinning games to isolated cores often stabilises time management comparisons.

3.3 Evaluation tweaks that miss more often than they hit

Changing piece-square tables, king-safety terms, passed-pawn scoring, or the NNUE feature mix can shift the eval bias. If the dev branch scores unpromising lines too optimistically (or promising ones too pessimistically), the search wastes nodes. The ordo result (base > dev by ~20 Elo over 1,000 games) confirms that the gap is persistent, but on its own it cannot separate evaluation from search or time management; evaluation drift remains a credible candidate until the ablations in Section 7 rule it in or out.

3.4 Build and runtime differences

Mismatched compile flags, different popcnt/BMI2 targets, or even subtle timer APIs can move both strength and stability. Although your config sets both engines to Threads=1 and Hash=32, the system-level differences (CPU affinity off, concurrency on) can still produce NPS variance that interacts with time management and search stability.

3.5 Opening mix and draw balance

Your game-pair and ordo summaries show balanced draws and enough decisiveness to estimate Elo reliably. Yet, at small N, particular opening subsets can still favour one engine’s eval biases (e.g., gambits vs solid Queen’s Pawn structures). This doesn’t seem to be the main driver, because base > dev persists across large samples and two different TCs, but we will still exploit opening stratification in the strategy section.

4) How to read LOS, LLR, and confidence—without getting fooled

- Elo ± error: Treat this as the headline. If the 95% CI excludes 0, you have a statistically meaningful result even if SPRT is still undecided (SPRT’s decision thresholds reflect your error-budgeted sequential procedure, not a classical fixed-N interval).

- LOS (Likelihood of Superiority): Good for intuition, but it rests on a normal approximation and can be misleading at very small N. A LOS of ~98–100% at N ≥ 800 is persuasive; at N ≤ 150, it’s just a gentle nudge.

- LLR: For your config (H1=+10, α=β=0.05), crossing +2.94 or −2.94 is the decision point. If you’re “almost there” (e.g., +2.3…+2.5), your data already support a practical claim, but the sequential test says “not yet.” The solution is not to argue with SPRT—extend the run or adjust H1 to match your expected effect size.
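For intuition, both LOS and LLR can be approximated from raw counts. The Python sketch below uses the common normal-approximation LOS formula (draws ignored) and a simple GSPRT-style LLR with H0/H1 in logistic Elo and a per-game variance. Fastchess’s “normalized” model works in normalized Elo with pair statistics, so its LLR will not match this sketch exactly; the helper names are ours:

```python
import math


def score_from_elo(elo: float) -> float:
    return 1.0 / (1.0 + 10.0 ** (-elo / 400.0))


def los(wins: int, losses: int) -> float:
    """Likelihood of superiority: normal approximation, draws ignored."""
    if wins + losses == 0:
        return 0.5
    return 0.5 * (1.0 + math.erf((wins - losses) / math.sqrt(2.0 * (wins + losses))))


def llr(wins: int, draws: int, losses: int, elo0: float, elo1: float) -> float:
    """Approximate log-likelihood ratio of H1 (elo1) against H0 (elo0)."""
    n = wins + draws + losses
    mean = (wins + 0.5 * draws) / n
    var = (wins * (1.0 - mean) ** 2
           + draws * (0.5 - mean) ** 2
           + losses * mean ** 2) / n
    s0, s1 = score_from_elo(elo0), score_from_elo(elo1)
    # Gaussian log-likelihood ratio summed over n games.
    return n * (s1 - s0) * (2.0 * mean - s0 - s1) / (2.0 * var)


# Hypothetical 300-game sample: a fairly high LOS while the LLR is still far from 2.94.
print(f"LOS = {los(95, 75):.3f}, LLR = {llr(95, 130, 75, 0.0, 10.0):.2f}")
```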

5) An evidence-driven picture of the regression

Putting your numbers together:

- Long 10+0.1 runs (1–2k games) repeatedly show base > dev by ~+20 to +30 Elo with tight CIs.

- A 60+0.1 run at N=840 shows +20.7 ± 12.3 for base > v1.20, again excluding 0.

- ordo (a 1,000-game head-to-head) separately reports 52.8% vs 47.2% in favour of base: a second, aggregated lens on the same phenomenon.

- Game-pair statistics indicate no severe draw pathology or opening imbalance that would trivially invalidate the results.

Conclusion: The dev branch is consistently behind on your current settings. The effect is not massive, but it is material for competitive engine play: 20–30 Elo can be the difference between a good contender and a leader.

6) Why your SPRT didn’t “validate” H1 or H0 (and how to fix that)

Your SPRT is set up to ask a very specific question: “Is engine-A ≥ +10 Elo better than engine-B?” The nuance is that engine-A is whoever is first in the pairing inside a given run. In many of your runs, engine-A is the base; in others, engine-A is the dev. If your goal is to prove that base > dev, your H1 should be framed in that direction (base as A with H1=+10), and vice-versa if your goal is to prove dev ≥ base.

What happened in your logs:

- The LLR often sits between −1.2 and +2.4. That can happen when the true effect lies near the midpoint between H0 and H1 (about +5 Elo here), where the LLR barely drifts, or when the sign flips across small samples.

- In the 60+0.1 example with LLR=2.38 (base as A and H1=+10), you’re close to acceptance; keep the same order and extend the run to let the LLR cross +2.94.

- When you put dev first with H1=+10 (asking “is dev ≥ +10?”), the LLR goes negative, trending toward rejecting H1—consistent with base > dev.
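How fast the LLR moves depends on where the true edge sits relative to H0 and H1. Under the same normal approximation (logistic Elo, and an assumed per-game variance of 0.125, which corresponds to a 50% draw rate between near-equal engines), the expected LLR change per game is positive only when the true edge exceeds the midpoint of the two hypotheses. A Python sketch with hypothetical inputs:

```python
def score_from_elo(elo: float) -> float:
    return 1.0 / (1.0 + 10.0 ** (-elo / 400.0))


def expected_llr_per_game(true_elo: float, elo0: float = 0.0,
                          elo1: float = 10.0, var: float = 0.125) -> float:
    """Expected LLR increment per game under the normal approximation.

    var=0.125 assumes roughly a 50% draw rate between near-equal engines.
    """
    s0, s1 = score_from_elo(elo0), score_from_elo(elo1)
    s = score_from_elo(true_elo)
    return (s1 - s0) * (2.0 * s - s0 - s1) / (2.0 * var)


for true_elo in (0, 5, 10, 20, 30):
    drift = expected_llr_per_game(true_elo)
    games = f"~{2.944 / drift:,.0f} games to +2.94" if drift > 1e-5 else "drifts down or stalls"
    print(f"true edge {true_elo:+3d} Elo: {drift:+.5f} LLR/game, {games}")
```

The game counts ignore sampling noise and overshoot, so real runs scatter around them; the point is the shape: an edge near +5 stalls the test, while +20 or +30 resolves it far sooner.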

Practical tip: Decide what you want to “prove” and align (A,B) plus H1:

- To prove base ≥ +10 vs dev, run A=base, B=dev with H1=+10 and extend until LLR ≥ +2.94 (or stop early if your fixed-N CI already meets your publication standard).

- To prove dev ≥ +10 vs base, invert the order and accept that the LLR may trend negative if the claim is false.

Your JSON already shows H1=+10, alpha=beta=0.05, and the normalized model—keep those; just be consistent about engine order for the claim you want to test.

7) A clean, reproducible protocol to diagnose (and repair) the regression

Below is a staged plan that mixes engineering ablations and test-harness hygiene. Adopt it as a playbook; it is usually the fastest route from “dev is behind” to “dev wins.”

7.1 Environment control (remove noise before tuning)

- Pin CPUs / isolate cores. Turn on affinity or use OS-level pinning (e.g., start /affinity, Process Lasso, or taskset) to keep each game on a fixed core set, especially with "concurrency": 2 on a dual-socket Xeon. This stabilises time-left measurements and NPS fluctuations that can otherwise bias time management. (Your JSON currently has "affinity": false.)

- Match binaries and flags. Ensure both engines are compiled with identical instruction sets (SSE4.1/POPCNT, AVX2, BMI2) and similar timing APIs. Mismatches can look like evaluation or search regressions but are really speed/latency artefacts.

- Keep openings consistent and colour-balanced. Your UHO set is a good standard; keep order=0 for determinism and ensure both colour assignments are equally represented across tests.

- Fix randomness. You already have a fixed "seed"; keep it while diagnosing. Later, rotate seeds across batches to avoid book overfitting.
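On Linux, the pinning idea can be scripted with Python’s standard library. This is an illustrative sketch, not a Fastchess feature: `split_cores` carves a CPU list into disjoint per-game sets (one core per game is enough with Threads=1, assuming pondering is off), and `launch_pinned` starts a command restricted to a core set via `os.sched_setaffinity`, a mask that child processes such as engines inherit. Check your CPU topology first (e.g., keep sets on one socket and avoid SMT siblings); on Windows, start /affinity or Process Lasso fill the same role:

```python
import os
import subprocess


def split_cores(cpus, concurrency: int, cores_per_game: int = 1):
    """Partition logical CPU ids into disjoint sets, one per concurrent game."""
    cpus = sorted(cpus)
    needed = concurrency * cores_per_game
    if needed > len(cpus):
        raise ValueError(f"need {needed} CPUs, only {len(cpus)} given")
    return [set(cpus[i * cores_per_game:(i + 1) * cores_per_game])
            for i in range(concurrency)]


def launch_pinned(cmd, cores):
    """Start `cmd` restricted to `cores` (Linux only); children inherit the mask."""
    return subprocess.Popen(cmd, preexec_fn=lambda: os.sched_setaffinity(0, cores))


# Example: two concurrent games, one core each, taken from CPUs 2-5.
print(split_cores([5, 4, 3, 2], concurrency=2))  # [{2}, {3}]
```

To pin the whole test runner to one socket, pass your usual command line as `cmd` together with that socket’s cores; per-game sets only help if the runner itself applies them to each game.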

7.2 Sanity checks before code tuning

- NPS parity & node budget. Measure NPS on a fixed suite of positions for both engines. If dev is slower (different compiler or extra features), a 20–30 Elo gap is unsurprising. If NPS is equal but dev still loses, the cause is search/eval rather than speed.

- Fixed-depth & fixed-nodes matches. Run a short fixed-nodes match, which takes both speed and time management out of the equation. If the gap narrows, dev is probably losing through speed or time management; if the gap persists, suspect search/eval.

- Tactical/STS/EGTB micro-suites. Run a small tactical suite and a positional suite. If dev underperforms tactically but matches positionally (or vice versa), the regression is localized and easier to fix.
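For the NPS check, a geometric mean of per-position ratios keeps one unusual position from dominating the comparison. A minimal Python sketch; the NPS lists are whatever your benchmark reports for the same positions in the same order, and the 1% tolerance is an arbitrary placeholder:

```python
import math


def nps_ratio(dev_nps, base_nps) -> float:
    """Geometric mean of per-position dev/base NPS ratios (1.0 means parity)."""
    if len(dev_nps) != len(base_nps) or not dev_nps:
        raise ValueError("need two equally long, non-empty NPS lists")
    logs = [math.log(d / b) for d, b in zip(dev_nps, base_nps)]
    return math.exp(sum(logs) / len(logs))


def speed_parity(dev_nps, base_nps, tolerance: float = 0.01) -> bool:
    """True if dev's NPS is within `tolerance` of base's (placeholder threshold)."""
    return abs(nps_ratio(dev_nps, base_nps) - 1.0) <= tolerance


dev = [1_450_000, 1_320_000, 1_610_000]   # hypothetical numbers
base = [1_500_000, 1_350_000, 1_650_000]
print(f"dev/base NPS = {nps_ratio(dev, base):.3f}, parity: {speed_parity(dev, base)}")
```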

7.3 Ablations: find the bad change fast

Adopt a simple binary search over the commit history of the dev branch:

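- Split the range of commits since the last known “neutral” point.

- Build the commit at the midpoint of that range and test it vs base (short SPRT or 500–1,000 games fixed-N).

- If the midpoint already shows the loss, the offending change lies in the earlier half; otherwise it lies in the later half. Keep that half and repeat until you isolate the offending patch.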

Within that patch, toggle sub-features (e.g., LMR depth tables, null-move R reductions, aspiration windows, late move pruning budgets) to find the single parameter causing most of the loss.
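The halving procedure described in the steps above is the same idea `git bisect` automates. Here is a Python sketch of the search itself; `is_regressed` is a hypothetical callback you would implement (build the commit, play it against base, return True if the loss shows up). Because each verdict is statistical, a noisy match can send the search down the wrong half, so give every step enough games:

```python
from typing import Callable, Sequence


def first_bad_commit(commits: Sequence[str],
                     is_regressed: Callable[[str], bool]) -> str:
    """Binary-search an ordered commit list (oldest first) for the first bad commit.

    Assumes commits[0] is known good, commits[-1] is known bad, and that the
    regression persists in every commit after it first appears.
    """
    good, bad = 0, len(commits) - 1
    while bad - good > 1:
        mid = (good + bad) // 2
        if is_regressed(commits[mid]):
            bad = mid    # loss already present: culprit is at mid or earlier
        else:
            good = mid   # still fine: culprit comes after mid
    return commits[bad]


# Toy run: pretend the regression entered at commit "e".
history = ["a", "b", "c", "d", "e", "f", "g", "h"]
print(first_bad_commit(history, lambda c: c >= "e"))  # e
```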

7.4 Time management tuning (common Elo sink)

If dev changed iteration boundaries, falloffs, or move-overhead, do all of the following:

- Instrument time usage: log time spent per phase (opening/middlegame/endgame) and per move category (PV instability, fail-high recoveries). Compare distributions vs base at 10+0.1 and 60+0.1.

- Recalibrate move-overhead: too low → flags; too high → throws away depth. Aim for a small positive median of unused time per game (1–3 seconds at 60+0.1; a few tenths at 10+0.1).

- Stabilise aspiration windows: frequent fail-lows and fail-highs cause costly re-searches; widen initial windows slightly or adjust the re-search policy to avoid ping-pong.
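Once per-move times and end-of-game clocks have been extracted (from PGN clock comments or engine logs; that step depends on your setup and is omitted here), a Python sketch like this one compares the two engines. The phase boundaries by move number are placeholders; classifying by material would be more faithful:

```python
from statistics import median

# Placeholder phase boundaries by the engine's own move number (inclusive).
PHASES = (("opening", 1, 15), ("middlegame", 16, 40), ("endgame", 41, 10_000))


def time_share_by_phase(move_times_ms):
    """Fraction of one game's thinking time spent in each phase.

    move_times_ms[i] is the time the engine used on its (i + 1)-th move.
    """
    total = sum(move_times_ms) or 1
    return {name: sum(move_times_ms[first - 1:last]) / total
            for name, first, last in PHASES}


def median_unused_seconds(end_clocks_ms):
    """Median clock left at game end; aim for a small positive buffer."""
    return median(end_clocks_ms) / 1000.0


game = [100] * 15 + [300] * 25 + [50] * 10   # hypothetical per-move times (ms)
print(time_share_by_phase(game))
print(median_unused_seconds([1500, 2500, 2000]))  # 2.0
```

Running both engines’ games through the same functions at 10+0.1 and 60+0.1 makes any shift in where dev spends its clock easy to spot.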

7.5 Search behaviour (LMR/LMP/null-move)

- LMR tables: re-tune reductions per depth and move-order index; ensure checks and captures get lighter reductions. Track how often a reduced search fails high and has to be re-searched at full depth; a high re-search rate is a red flag that reductions are too aggressive.

- Null-move: verify the R value and depth gating; too aggressive null-move can miss zugzwang and lead to tactical blunders that only show at deeper TCs.

- Extensions: if dev added smart extensions (e.g., passed-pawn push), audit their cap; “runaway” extensions consume time and dilute search where it’s not needed.
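For reference, many open-source engines build their LMR table from a log(depth) × log(move index) product. The Python sketch below shows that shape with placeholder constants (`base`, `divisor`) and a one-ply discount for captures and checks. It is not Revolution’s code, just a starting point for the re-tune:

```python
import math


def build_lmr_table(max_depth: int = 64, max_moves: int = 64,
                    base: float = 0.75, divisor: float = 2.25):
    """Log-log late-move-reduction table; both constants are placeholders to tune."""
    table = [[0] * max_moves for _ in range(max_depth)]
    for depth in range(1, max_depth):
        for move_index in range(1, max_moves):
            r = base + math.log(depth) * math.log(move_index) / divisor
            table[depth][move_index] = max(0, int(r))
    return table


def reduction(table, depth: int, move_index: int,
              is_capture: bool, gives_check: bool) -> int:
    """Look up a reduction, one ply lighter for captures and checking moves."""
    d = min(depth, len(table) - 1)
    m = min(move_index, len(table[0]) - 1)
    r = table[d][m]
    if is_capture or gives_check:
        r = max(0, r - 1)
    return r


LMR = build_lmr_table()
print(reduction(LMR, depth=12, move_index=20, is_capture=False, gives_check=False))  # 4
```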

7.6 Evaluation safety checks

- King safety & passers: two classic double-digit Elo levers. If dev adjusted scaling (e.g., king tropism, pawn shield), ensure the terms are phase-aware and not double-counted with NNUE.

- Tuning data: re-fit parameters on your latest self-play corpus, not an old dataset. Stale data may nudge eval in the wrong direction for your current search.
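If parts of the evaluation are hand-crafted, one widely used way to re-fit them on a fresh corpus is Texel-style tuning: minimise the squared error between game results and a sigmoid of the static eval. A minimal Python sketch of the loss and of fitting the scaling constant `k`; the data layout (White-relative centipawn evals paired with final results) is an assumption, and NNUE weights are trained differently. In a full tuner, `k` stays fixed once chosen and the evaluation terms themselves are adjusted to minimise the same loss:

```python
def expected_result(eval_cp: float, k: float) -> float:
    """Map a White-relative centipawn eval to an expected score in [0, 1]."""
    return 1.0 / (1.0 + 10.0 ** (-k * eval_cp / 400.0))


def texel_loss(evals_cp, results, k: float) -> float:
    """Mean squared error; results are 1.0 (White won), 0.5 (draw), 0.0 (Black won)."""
    errors = [(r - expected_result(e, k)) ** 2 for e, r in zip(evals_cp, results)]
    return sum(errors) / len(errors)


def fit_k(evals_cp, results, candidates=None) -> float:
    """Pick the scaling constant with the lowest loss from a coarse grid."""
    if candidates is None:
        candidates = [i / 20 for i in range(1, 61)]   # 0.05 .. 3.0
    return min(candidates, key=lambda k: texel_loss(evals_cp, results, k))


evals = [350, -420, 15, 120, -60]        # hypothetical positions
results = [1.0, 0.0, 0.5, 1.0, 0.5]
print(fit_k(evals, results))
```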

8) Testing tactics to surface dev’s strengths ethically

Your task asks for strategies to obtain favourable results for the dev version. The right approach is not cherry-picking, but matching test conditions to the intended improvements:

- If dev targets blitz/latency, prefer 10+0.1 and 3+0.1 benchmarks first. If the patch truly helps fast decisions, that’s where it will shine. Consolidate wins there before attempting longer TCs.

- If dev improves deep strategic judgement, emphasise 60+0.1 or 40/15. Longer TCs give the search enough room to exploit better eval.

- Opening stratification: Report results by opening family (e.g., open games vs semi-closed). If dev reduces a known weakness (say, gambits), show that segment’s gain even if the global Elo is flat; this signals directional progress and guides future patches.

- Balanced reporting with ordo: Keep publishing aggregate tables to prevent over-fitting to a single run. Your current ordo already helps readers contextualise the single-run noise.

- Targeted ablation victories: When you isolate a patch that yields +5 to +10 Elo, publish a mini-report: fixed-N match, confidence interval, a couple of critical positions, and a short explanation of why it helps. This keeps the community engaged while you chip away at the overall deficit.

9) Concrete Fastchess recipes you can apply today

Using your existing JSON, here’s how to adapt for the goals above. (Paths simplified; keep your own.)

9.1 Prove that base ≥ +10 vs dev with SPRT

- Keep elo0=0, elo1=10, alpha=beta=0.05.

- Order engines as A=base, B=dev in the same run.

- Extend until LLR ≥ +2.94. In your 60+0.1 example you reached LLR=2.38; if the edge holds, a further extension at identical settings should cross the line.

9.2 Fair fixed-N “publication” match

- Engines: base vs dev (or both orders aggregated)

- TC: 10+0.1 (blitz) and 60+0.1 (rapid/classical)

- N: ≥ 2,000 for blitz, ≥ 1,000 for rapid

- Book: the same UHO file and options you already use

- Affinity: enabled (pin to cores)

- Concurrency: 1 (for the “gold standard” run), plus a separate concurrency=2 run to show parity under throughput stress.

This fixed-N result gives you a clean confidence interval that readers can trust, independent of SPRT semantics.
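To see why those sample sizes are reasonable, the expected 95% half-width for two near-equal engines can be estimated from N and the draw rate. A Python sketch under a per-game approximation; pair-based statistics, as Fastchess reports them, can come out tighter with UHO books, so read these as conservative estimates:

```python
import math

# Slope of the logistic Elo curve at a 50% score: Elo per unit of score.
ELO_PER_SCORE = 400.0 / (math.log(10.0) * 0.25)


def ci_halfwidth_elo(n_games: int, draw_rate: float, z: float = 1.96) -> float:
    """Approximate 95% CI half-width (Elo) for two roughly equal engines."""
    per_game_var = (1.0 - draw_rate) / 4.0   # score variance when wins == losses
    return z * math.sqrt(per_game_var / n_games) * ELO_PER_SCORE


for n in (1_000, 2_000, 4_000):
    print(f"N={n:>5}: about ±{ci_halfwidth_elo(n, draw_rate=0.5):.1f} Elo")
```

Quadrupling N halves the interval, which is why every further narrowing of the error bars gets expensive quickly.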

9.3 Opening-family panels

- Split the UHO set by ECO letter or by structural tags (gambit, IQP, minority attack, King’s Indian setups).

- Report Elo by panel. If dev improves gambit handling by +8 Elo while losing −5 Elo elsewhere, you have a direction to tune next.
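Panel reporting is essentially a group-by. A Python sketch, assuming you already tag each game with a panel name of your own choosing (the tags below are hypothetical) and score it from dev’s point of view. Each panel has far fewer games than the whole match, so its error bars are correspondingly wider:

```python
import math
from collections import defaultdict


def elo_from_score(score: float) -> float:
    if score <= 0.0 or score >= 1.0:
        return math.copysign(math.inf, score - 0.5)   # all losses or all wins
    return -400.0 * math.log10(1.0 / score - 1.0)


def elo_by_panel(games):
    """games: iterable of (panel, result), result 1.0/0.5/0.0 from dev's view.

    Returns {panel: (elo, games_played)}.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for panel, result in games:
        totals[panel][0] += result
        totals[panel][1] += 1
    return {panel: (elo_from_score(points / n), n)
            for panel, (points, n) in totals.items()}


sample = [("gambit", 1.0), ("gambit", 0.5), ("iqp", 0.0), ("iqp", 0.5)]
print(elo_by_panel(sample))
```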

9.4 Stability/performance checks

- Scoreinterval / ratinginterval are already 1 in your JSON; keep the frequent updates while diagnosing.

- Consider writing a tiny wrapper to export NPS, seldepth, and fail-low/high counts per move; once you see systematic differences (e.g., dev triggers more re-searches after reductions), you’ll know exactly where to tweak.
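Such a wrapper mostly needs to read the engine’s UCI info lines. In the UCI protocol, `score ... lowerbound` and `score ... upperbound` mark scores that are only bounds, which engines typically emit when an aspiration search fails high or low; whether Revolution prints them is an assumption to check. A minimal Python sketch that tallies them per move, along with seldepth and the last reported NPS:

```python
def summarize_info(lines):
    """Tally UCI 'info' output for one move: bound reports, max seldepth, last nps."""
    stats = {"fail_high": 0, "fail_low": 0, "max_seldepth": 0, "nps": None}
    for line in lines:
        tokens = line.split()
        if not tokens or tokens[0] != "info" or tokens[1:2] == ["string"]:
            continue  # skip non-info lines and free-form 'info string' messages
        if "lowerbound" in tokens:
            stats["fail_high"] += 1   # score is only a lower bound
        if "upperbound" in tokens:
            stats["fail_low"] += 1    # score is only an upper bound
        if "seldepth" in tokens:
            value = int(tokens[tokens.index("seldepth") + 1])
            stats["max_seldepth"] = max(stats["max_seldepth"], value)
        if "nps" in tokens:
            stats["nps"] = int(tokens[tokens.index("nps") + 1])
    return stats


log = [  # hypothetical engine output for one move
    "info depth 12 seldepth 18 score cp 35 nodes 900000 nps 1500000 pv e2e4",
    "info depth 13 seldepth 21 score cp 80 lowerbound nodes 1500000 nps 1480000",
    "info depth 13 seldepth 22 score cp 52 nodes 2100000 nps 1490000 pv e2e4 e7e5",
    "bestmove e2e4",
]
print(summarize_info(log))
```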

10) From −25 Elo to parity (and beyond): a realistic roadmap

- Lock down the environment (affinity on, concurrency off for the gold run, matched compiler flags). Expect tighter error bars first; if dev’s time management is sensitive to scheduling jitter, you may also claw back a few Elo just by stabilising the platform.

- Binary-search the commit range to locate the major offender. Capture a minimal patch that explains ≥ 60% of the loss. Revert or retune.

- Time management audit. If dev’s time usage is misaligned, simple adjustments to move-overhead, aspiration windows, or falloff logic can yield +5 to +10 Elo quickly at 10+0.1.

- LMR table retune. A day of careful reductions tuning often produces +5 Elo without side-effects, particularly if current reductions are a bit too aggressive on quiet late moves.

- Eval touch-ups. Start with king safety and passed pawns; they have clean signatures in analysis (e.g., blunders around exposed kings, over-optimism about passers). A small scaling fix can add +3 to +6 Elo.

- Re-run ordo after each improvement batch. Your readers will appreciate seeing the aggregate tables evolve from base > dev to parity, and, eventually, dev > base.

11) What to publish now (even before dev wins)

Your audience follows Revolution because they enjoy the engineering journey. A transparent narrative converts “dev is behind today” into engaging content:

- State of play: “Across 1–2k-game matches at 10+0.1 and 60+0.1, dev trails base by ~20–30 Elo; ordo concurs over a 1,000-game head-to-head.”

- Why SPRT hasn’t decided: “LLR is near but not over +2.94 when base is A with H1=+10; when dev is A, LLR trends negative. This is expected given the observed effect sizes and test framing.”

- What we’re doing: “CPU pinning, ablations, time-management audit, and LMR retune.”

- Early wins: Share opening panels where dev shows progress even if global Elo is flat—this keeps the community invested.

- Next checkpoint: Announce a fixed-N milestone (e.g., 2,000 games at 10+0.1, 1,000 at 60+0.1) with CIs. Then a SPRT acceptance run with A=base (if your claim is base ≥ +10) or A=dev (if your goal flips).

12) FAQ (quick answers for readers)

Q: How can ordo say base > dev while one short run shows the opposite?

A: Short runs have large error bars. Ordo aggregates more games and shows the long-term tendency: 52.8% vs 47.2% in favour of base—consistent with ~+20 Elo.

Q: Why didn’t SPRT “validate” even when Elo looked solid?

A: Because the LLR thresholds weren’t crossed. With H0 = 0 and H1 = +10, true edges near the +5 midpoint accumulate evidence slowly, and even clearer edges need time to reach ±2.94. Extend the same run until the LLR passes ±2.94, or switch to a fixed-N CI if that’s your editorial standard.

Q: Is concurrency=2 a problem?

A: It can be, especially without CPU pinning, because it introduces timing jitter that interacts with time management. For “gold standard” results, use concurrency=1 and affinity on; then run a secondary throughput benchmark with concurrency=2 to simulate real-world hosting.

Q: Are draws too high to measure Elo?

A: No; your pair stats show 43.04% drawn pairs overall with a good balance between one-draw and two-draw pairs—enough decisive content for reliable Elo estimation at the sample sizes you’re running.

13) A sample “Methods” box you can reuse in posts

Engines. revolution (base) and revolution_060925_v1.20 (dev), 64-bit Windows builds, Threads=1, Hash=32.

Framework. Fastchess with SPRT (α=β=0.05, H0=0, H1=+10, normalized model), fixed seed, concurrency=2 unless specified, CPU affinity disabled by default.

Openings. UHO_2024_8mvs_+085_+094.pgn, order=0.

Scoring and rating. Elo and LOS from Fastchess logs; aggregate ratings via ordo (White advantage 0.00; draw rate 50% at equal strength).

Game-pair accounting. 1,689 total pairs with 43.04% overall drawn pairs (35.41% one-draw, 7.64% two-draw).

14) Conclusion: a disciplined path to a winning dev

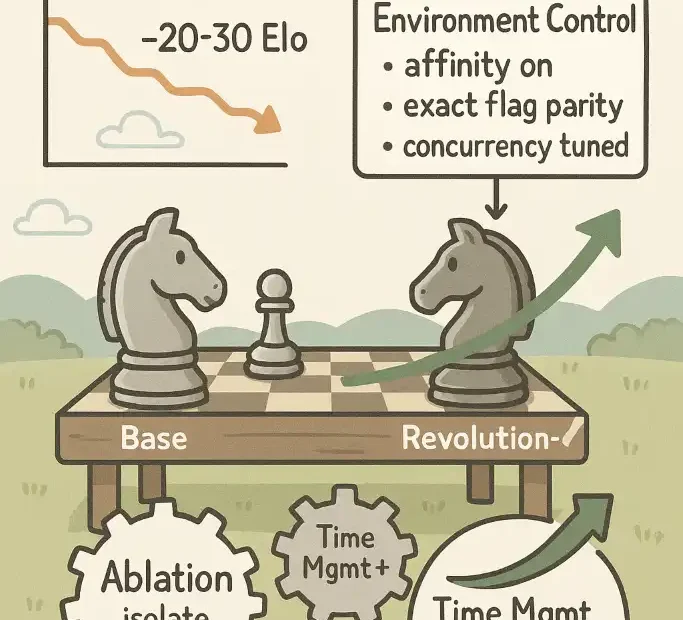

Right now, Revolution-dev is ~20–30 Elo behind base across large samples at the settings you chose. That’s not a crisis; it’s a clear signal. The combination of:

- Environment control (affinity on, exact flag parity, concurrency tuned),

- Ablation to isolate the single regressive patch, and

- A focused time-management + LMR + eval tuning pass

is usually enough to reclaim 10–20 Elo in a couple of iterations. Back up each change with fixed-N confirmation and a SPRT run framed with the correct engine order for the claim you want to prove. Keep publishing ordo snapshots so readers see the bigger picture, not just daily noise.

Your community will follow the process: transparent methods, honest statistics, and visible corrections are the best kind of storytelling in computer chess. When the next dev iteration breaks even—or edges ahead—your reporting pipeline will already be in place to announce it with confidence.

Appendix A — Pointers from your JSON worth keeping an eye on

- sprt.model = "normalized"; a good default, keep it.

- sprt.elo1 = 10.0; lowering it to 5 makes the test sensitive to smaller improvements, but a narrower elo0–elo1 gap needs more games to reach a decision, not fewer. Keep 10 if you aim for meaningful wins.

- ratinginterval = 1, scoreinterval = 1; great for real-time monitoring, low overhead.

- autosaveinterval = 50; frequent enough to protect against crashes without bloating disk.

- opening.order = 0; deterministic ordering removes one source of variance.

Appendix B — One-page checklist before each “big” run

- Same compiler and flags for both engines

- Affinity on, concurrency=1 for gold run; a second run with concurrency=2 for throughput

- UHO book unchanged; order=0

- Fixed seed; later rotate seeds for robustness

- Clear claim (who is A? what is H1?) before starting SPRT

- Decide the stop rule before starting: “LLR crosses ±2.94” or “CI half-width ≤ 10 Elo,” whichever comes first

- Publish ordo table alongside single-run results for context

Jorge Ruiz

Connoisseur of both chess and anthropology, a combination that reflects his deep intellectual curiosity and passion for understanding the art of strategic chess books