strength of chess engines

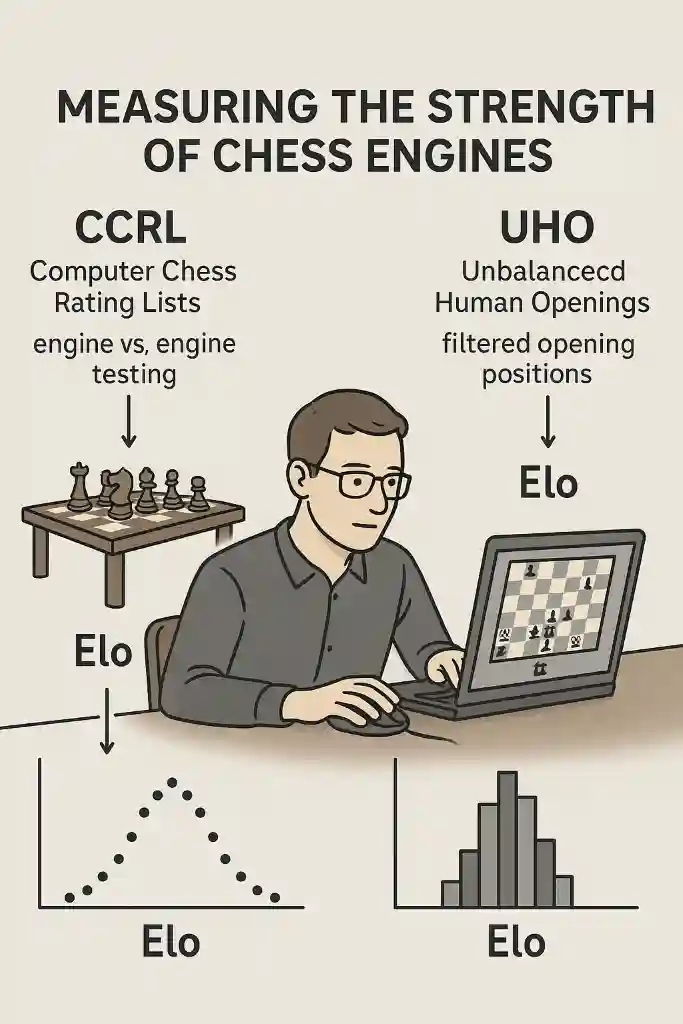

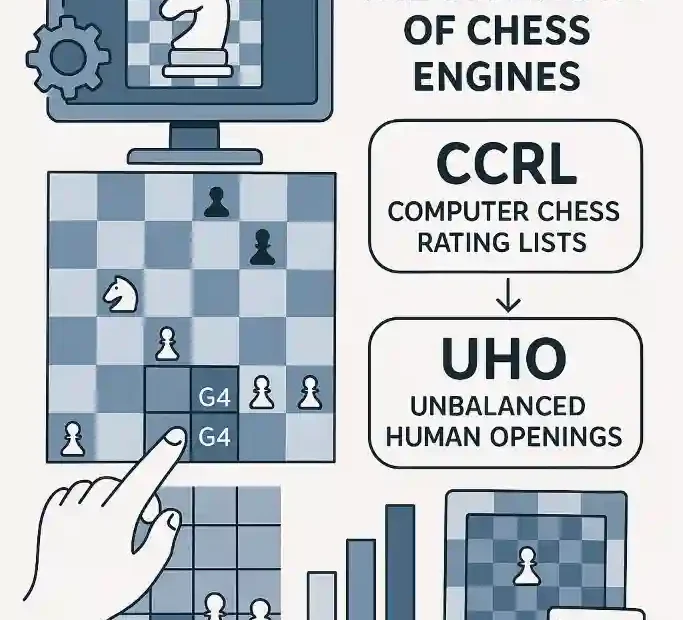

Measuring the strength of chess engines is a pivotal endeavour in both academic research and practical engine development. With the rapid pace at which engine evaluation and hardware advance, precise and reproducible testing methods are essential to benchmark improvements, compare disparate architectures, and guide future innovation. Two prominent approaches stand out in the modern landscape: the Computer Chess Rating Lists (CCRL), a long‑established, community‑driven framework for large‑scale engine versus engine testing, and the Unbalanced Human Openings (UHO) suite, pioneered by Stefan Pohl, which emphasises human‑played, filtered opening positions coupled with advanced scoring methods to enhance Elo reliability. By examining the philosophy, methodology, and practical implementation of these two systems, one can gain a comprehensive understanding of how to quantify chess‑engine strength with rigour and nuance.

The CCRL project was founded in 2006 by a group of enthusiasts seeking a collaborative, transparent platform for engine testing. Their core objective is to produce rating lists under standardised conditions—such as fixed opening books, time controls calibrated to a reference CPU, and tablebase integration—so that results become comparable over time and across different hardware setups. CCRL’s infrastructure includes Bayeselo-based Elo calculations, likelihood‑of‑superiority (LOS) statistics, cross‑tables for head‑to‑head comparisons, and downloadable game databases organised by engine, ECO code and other parameters.

human‑played openings

In contrast, the UHO suite, developed by Stefan Pohl, originates from the observation that many in the chess‑engine community prefer “pure” human‑played openings over artificially constructed or manually modified lines. Pohl’s Unbalanced Human Openings consist exclusively of positions drawn from high‑quality human games (both sides ≥ 2200 Elo), filtered by engine evaluation to create opening sets with a predetermined advantage for White. These imbalances intentionally reduce draw ratios and widen Elo spreads, thereby improving the statistical reliability of engine comparisons with fewer games. Moreover, UHO is accompanied by custom scoring systems, such as Advanced Armageddon Scoring (AAS) and the Gamepair Rescorer Tool, that further refine Elo estimation in tournaments featuring extreme strength disparities.

This article explores these two paradigms in depth. The first main section dissects the CCRL methodology, detailing testing conditions, data analysis techniques and available tools. The next section delves into the UHO suite: its creation process, filtering criteria and rescoring mechanisms. A comparative analysis then highlights the key differences, including handling of draw ratios, computational requirements, and Elo reliability. Finally, practical guidelines will assist readers in setting up their own engine tests under both systems, followed by an objective conclusion on the advantages of each approach. Through this exploration, you will be equipped with the knowledge to select or design the most appropriate testing methodology for your engine‑strength measurement needs.

CCRL

The Computer Chess Rating Lists (CCRL) represent a collaborative, transparent project aimed at generating robust Elo ratings for chess engines by running extensive engine‑versus‑engine matches under uniform conditions. Established by Graham Banks, Ray Banks, Sarah Bird, Kirill Kryukov and Charles Smith in 2006, CCRL now includes a team of testers who contribute hardware, run matches and aggregate results on a central website. The core principles of CCRL testing can be summarised as follows:

1. Standardised Opening Book

- Utilises a generic opening book, typically limited to 12 moves per side, to ensure reproducibility across tests.

- Books are derived from public databases and remain identical for all engines, so no engine benefits from its own opening book.

2. Fixed Hardware Calibration

- CCRL’s benchmark CPU is an Intel i7‑4770K. Time controls for other machines are calibrated by benchmarking Stockfish 10 on the test machine, comparing the result with the reference hardware, and then adjusting the allocated time so that engines play at equivalent strength.

- This calibration ensures that engine comparisons across diverse systems remain meaningful and that Elo differences reflect engine logic rather than hardware variance.

3. Time Controls and Tablebases

- Different lists cater to various pace categories:

  - CCRL 40/15 uses an equivalent of 40 moves in 15 minutes per side.

  - CCRL Blitz employs 2 minutes + 1 second increment per move.

  - CCRL Fischer Random (FRC) and other variants address non‑standard formats.

- Pondering is disabled (“ponder off”) to maintain consistent CPU usage per move.

- Endgames are adjudicated using Syzygy tablebases, typically up to 5 pieces (3‑, 4‑ and 5‑piece EGTB), removing uncertainty in theoretical endgames.

4. Large‑Scale Game Generation

- CCRL runs over two million games across all engines in a given list, ensuring that statistical fluctuations average out. For instance, the CCRL 40/15 list on 14 April 2025 contained 2,078,348 games among 4,131 engine versions, yielding a draw ratio of 48.1% and a White score of 54.7%.

- Games are stored in PGN format and made available for download, both with and without engine comments, facilitating independent analysis.

5. Elo and Statistical Analysis

- CCRL employs the Bayeselo algorithm to compute Elo ratings from match results, which accounts for statistical uncertainty and head‑to‑head performance.

- The Likelihood of Superiority (LOS) metric quantifies the probability that one engine genuinely outranks another, offering an intuitive statistical gauge alongside Elo differences.

- Cross‑tables and filtered lists (by ECO code, opening, monthly games) allow testers to explore specific engine interactions and opening‑based performance.

6. Transparency and Community Involvement

- Testers adhere to guidelines but retain freedom in choice of engines and minor parameters, ensuring both standardisation and broad participation.

- All raw data (games, statistics, engine listings) are published openly, inviting scrutiny, replication, or extension by the wider chess community.
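The hardware calibration described in point 2 amounts to scaling the clock by a speed ratio. The following is a minimal Python sketch, assuming a hypothetical benchmark that reports how many seconds each machine needs for the same fixed Stockfish 10 workload. The function name and all numbers are illustrative assumptions, not CCRL's actual procedure.

```python
def scale_time_control(base_seconds: float, base_increment: float,
                       reference_bench_seconds: float,
                       local_bench_seconds: float) -> tuple[float, float]:
    """Scale a time control so that a local machine gets roughly the same
    effective thinking budget as the reference CPU.

    All four parameters are hypothetical inputs chosen for illustration.
    """
    if reference_bench_seconds <= 0 or local_bench_seconds <= 0:
        raise ValueError("benchmark times must be positive")
    # A slower machine (larger benchmark time) gets proportionally more time.
    factor = local_bench_seconds / reference_bench_seconds
    return base_seconds * factor, base_increment * factor


# Made-up example: the local machine needs 30 s for a workload that the
# reference CPU finishes in 40 s, so its clock is shortened.
main_time, increment = scale_time_control(120.0, 1.0, 40.0, 30.0)
print(main_time, increment)  # 90.0 0.75
```

A linear scale is the simplest possible choice; engine strength does not grow linearly with time, so a real calibration may need to be checked with test games.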

Through these methods, CCRL delivers a comprehensive and reproducible framework for measuring engine strength, particularly well‑suited to scenarios where maximum statistical confidence and long‑term comparability are priorities. By standardising hardware calibration, opening books and endgame adjudication, CCRL minimises extraneous variables, focusing Elo differences squarely on engine prowess.
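To make the Elo and LOS figures from point 5 more concrete, here is a small Python sketch using the standard logistic Elo formula and the common normal approximation for likelihood of superiority. Bayeselo itself fits a more elaborate Bayesian model, so this is only an approximation for a single head‑to‑head match, and the function names are assumptions made for illustration.

```python
import math


def elo_difference(wins: int, draws: int, losses: int) -> float:
    """Elo difference implied by a match score (logistic model)."""
    games = wins + draws + losses
    if games == 0:
        raise ValueError("no games played")
    score = (wins + 0.5 * draws) / games
    if score <= 0.0 or score >= 1.0:
        raise ValueError("Elo difference is undefined for a 0% or 100% score")
    return -400.0 * math.log10(1.0 / score - 1.0)


def likelihood_of_superiority(wins: int, losses: int) -> float:
    """Approximate probability that the first engine is the stronger one.

    Draws carry no information about which side is better, so only
    decisive games enter the formula.
    """
    decisive = wins + losses
    if decisive == 0:
        return 0.5
    return 0.5 * (1.0 + math.erf((wins - losses) / math.sqrt(2.0 * decisive)))


# Made-up match result: 300 wins, 500 draws, 200 losses (55% score).
print(round(elo_difference(300, 500, 200), 1))
print(likelihood_of_superiority(300, 200))
```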

UHO

The Unbalanced Human Openings (UHO) suite, developed by Stefan Pohl, offers a contrasting paradigm centred on human‑played opening positions with deliberate imbalances to reduce draw rates and widen Elo spreads. Inspired by the chess community’s conservatism regarding “non‑standard” openings, Pohl sought to create fully human‑sourced opening sets that provide a clear White advantage, without any artificially constructed lines or castling restrictions. The UHO methodology comprises several key components:

1. Source Data: Megabase Filtering

- UHO 2024 openings derive from Megabase 2024, a comprehensive collection of human games, with an Elo threshold lowered to 2200 for both players (down from 2300 in UHO 2022) to increase variety and size.

- Games are sorted by combined player Elo, truncated after 6 or 8 moves (12/16 plies), and filtered to retain only unique end‑positions where both queens remain on the board.

2. Engine Evaluation Filtering

- Stefan Pohl uses KomodoDragon 3.3 to evaluate every end‑position (10 seconds per position on an 8‑core AMD Ryzen CPU), retaining only those with engine evaluations within defined intervals (e.g., [+0.85;+0.94] pawns for UHO 2024).

- This process yields multiple opening sets with incrementally increasing White advantage, each labelled by its eval‑interval and depth (6‑moves, 8‑moves, and “big” 8‑moves files).

3. Draw‑Ratio Control

- By selecting eval‑intervals, test organisers can adjust the expected draw ratio of engine matches: higher eval intervals yield a lower draw rate, typically decreasing draw percentage by ~5% per stage.

- Recommended usage begins with the [+0.85;+0.94] set (draw ratio ~56.9%), moving to higher‑advantage sets if the environment is excessively drawish (e.g., homogeneous engine matches on fast hardware).

4. Advanced Armageddon Scoring (AAS)

- Recognising that simple scoring (1 point for a win, 0.5 for a draw) skews results when starting positions inherently favour White, Pohl introduced Advanced Armageddon Scoring, which rescores games to rebalance starting inequities.

- AAS assigns asymmetric point values, often boosting Black draws or penalising White draws, to normalise expected scores across opening sets, yielding more comparable Elo spreads even under disparate starting conditions.

5. Gamepair Rescorer Tool

- For tournaments featuring engines with extreme Elo differences, the Gamepair Rescorer Tool relies on each opening line being played twice (Engine A as White vs Engine B as Black, and vice versa), then rescores the results using a game‑pair method.

- This tool reduces variance in Elo calculation by ensuring each opening line contributes symmetrically, improving reliability with fewer games—a crucial advantage when engine resources are limited.

6. Fully Human and Unmodified

- Unlike some unbalanced opening experiments that incorporate constructed lines or castling restrictions (e.g., Pohl’s earlier NBSC openings), UHO sets are 100% human‑played and untouched, aside from the eval‑based filtering.

- This preserves the natural structure of human games while still achieving the desired imbalance for testing purposes.

7. Practical Outcomes

- In Pohl’s own tests (e.g., Stockfish 16 vs Torch 1, 3 min+1 sec, 1,000 games), UHO 2024 sets yielded scores around 61–62% for White, Elo differences of +80 to +92, and draw rates tunable between 40.3% and 56.9% depending on the chosen eval stage.
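The engine‑evaluation filter from point 2 boils down to an interval test on already evaluated positions. The sketch below assumes the evaluations have been computed beforehand and are available as pairs of a position string and an evaluation in pawns from White's point of view. The placeholder position names and the function are hypothetical and are not Pohl's actual tooling.

```python
from typing import Iterable


def filter_by_eval(positions: Iterable[tuple[str, float]],
                   low: float, high: float) -> list[str]:
    """Keep unique positions whose evaluation lies inside [low, high]."""
    seen: set[str] = set()
    kept: list[str] = []
    for position, eval_pawns in positions:
        if position in seen:
            continue  # keep only the first occurrence of a position
        seen.add(position)
        if low <= eval_pawns <= high:
            kept.append(position)
    return kept


# Made-up data: placeholder names stand in for real EPD/FEN strings.
sample = [
    ("position_a", 0.88),
    ("position_b", 0.40),
    ("position_a", 0.88),  # duplicate, ignored
    ("position_c", 0.94),
    ("position_d", 1.10),
]
print(filter_by_eval(sample, 0.85, 0.94))  # ['position_a', 'position_c']
```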

By leveraging human‑played positions, engine‑based filtering, and specialised rescoring, the UHO suite provides an alternative to traditional engine‑versus‑engine matches. Its emphasis on draw‑ratio management and Elo reliability with fewer games makes it particularly appealing for rapid testing cycles, resource‑constrained environments, or scenarios where starting‑position bias must be strictly controlled.
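The game‑pair idea from point 5 can be illustrated with a short Python sketch. It assumes one plausible scoring rule: the two games played on the same opening with colours reversed count as a single unit, which is won if Engine A scores more than 1 of the 2 points, drawn at exactly 1 point, and lost otherwise. The exact rules of the Gamepair Rescorer Tool are not described here, so treat the rule and the function name as assumptions.

```python
def rescore_gamepairs(pairs: list[tuple[float, float]]) -> tuple[int, int, int]:
    """Turn per-opening game pairs into (pair_wins, pair_draws, pair_losses).

    Each tuple holds Engine A's points (1, 0.5 or 0) in the game it played
    as White and in the game it played as Black on the same opening.
    """
    wins = draws = losses = 0
    for as_white, as_black in pairs:
        total = as_white + as_black
        if total > 1.0:
            wins += 1
        elif total == 1.0:
            draws += 1
        else:
            losses += 1
    return wins, draws, losses


# Made-up results for four openings.
pairs = [(1.0, 0.5), (1.0, 0.0), (0.5, 0.5), (0.5, 0.0)]
print(rescore_gamepairs(pairs))  # (1, 2, 1)
```

Under this rule, a pair in which each engine simply wins with White counts as a draw, so the built‑in White advantage of the opening does not by itself produce decisive results.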

CCRL and UHO methodologies

When deciding between CCRL and UHO methodologies, one must consider testing objectives, resource availability, and desired statistical confidence. The following comparative analysis outlines the key differences, before detailing a step‑by‑step guide for implementing each:

Key Differences

- Opening Paradigm

  - CCRL: Uses a uniform, generic opening book (up to 12 moves), ensuring standardisation and comparability across engines.

  - UHO: Employs human‑sourced, eval‑filtered openings with predetermined imbalances, designed to reduce draws and amplify Elo spreads.

- Draw Ratios and Elo Spreads

  - CCRL: Draw ratio emerges naturally (e.g., ~48.1% in CCRL 40/15), requiring large game samples (>2 million) to achieve stable Elo estimates.

  - UHO: Draw ratio is adjustable (45%–60%) via eval intervals, allowing fewer games (e.g., 1,000–3,000) to yield comparable Elo confidence.

- Scoring Adjustments

  - CCRL: Traditional scoring (1/0.5/0) combined with Bayeselo and LOS analyses.

  - UHO: Offers bespoke rescoring tools (AAS, Gamepair Rescorer) to correct for starting‑position bias and produce more linear Elo distributions.

- Hardware and Time Controls

  - CCRL: Relies on rigorous CPU calibration, comparing against Stockfish 10 on an Intel i7‑4770K.

  - UHO: Does not mandate specific hardware; users select time controls (e.g., 3 min+1 sec) appropriate to their engines and resources, focusing on opening imbalance rather than hardware normalisation.

- Data Transparency

  - Both systems publish raw game data, but CCRL emphasises exhaustive libraries (millions of games), whereas UHO provides filtered opening files and evaluation metadata for user‑driven analysis.

Step‑by‑Step Guide: CCRL Testing

1. Prepare Hardware & Calibration

- Benchmark your CPU against the CCRL reference (Intel i7‑4770K) using Stockfish 10, and determine the equivalent time control.

2. Acquire Opening Book

- Download the CCRL generic book (up to 12 moves).

3. Configure Engine Matches

- Disable pondering; enable EGTB support up to 5 pieces.

- Set time controls (e.g., 40/15 or 2+1).

4. Run Matches

- Generate at least several thousand games per engine pairing; aim for aggregate totals > 1 million to reduce noise.

5. Collect & Aggregate Results

- Export PGN files; upload to a central repository or local database.

6. Compute Statistics

- Use Bayeselo to calculate Elo ratings; extract LOS metrics.

- Generate cross‑tables, ECO stats and opening reports as needed.
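Before feeding games into a rating tool, it can help to sanity‑check the exported PGN. The sketch below is a minimal, standard‑library‑only example that counts the `Result` tags in a PGN text and derives the draw ratio and White score, the two summary figures quoted for the CCRL lists. The function names and the tiny sample are assumptions for illustration; a real pipeline would read the PGN from a file.

```python
import re
from collections import Counter

# Matches PGN header lines such as: [Result "1/2-1/2"]
RESULT_TAG = re.compile(r'^\[Result\s+"([^"]+)"\]', re.MULTILINE)


def summarise(pgn_text: str) -> dict:
    """Count finished games and compute draw ratio and White score."""
    counts = Counter(RESULT_TAG.findall(pgn_text))
    white_wins = counts["1-0"]
    black_wins = counts["0-1"]
    draws = counts["1/2-1/2"]
    games = white_wins + black_wins + draws  # unfinished games ("*") are skipped
    if games == 0:
        raise ValueError("no finished games found")
    return {
        "games": games,
        "draw_ratio": draws / games,
        "white_score": (white_wins + 0.5 * draws) / games,
    }


# Made-up four-game sample.
sample_pgn = '''[Event "test"]
[Result "1-0"]

1. e4 e5 1-0

[Event "test"]
[Result "1/2-1/2"]

1. d4 d5 1/2-1/2

[Event "test"]
[Result "0-1"]

1. c4 e5 0-1

[Event "test"]
[Result "1-0"]

1. Nf3 d5 1-0
'''
print(summarise(sample_pgn))
```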

Step‑by‑Step Guide: UHO Testing

1. Download UHO 2024 Files

- Select an eval‑interval set (e.g., [+0.85;+0.94] 6‑moves).

2. Configure Engine Matches

- Use your preferred time control (e.g., 3 min+1 sec); pondering off is recommended.

3. Run Matches

- Play each engine pairing on the chosen UHO opening file; 1,000–3,000 games typically suffice.

4. Apply Rescoring

- Use the Gamepair Rescorer Tool to symmetrise openings; optionally apply Advanced Armageddon Scoring to correct bias.

5. Analyse Elo & Draw Ratio

- Compute Elo via standard formulas or Bayeselo; verify draw ratio remains within 45%–60%.

6. Iterate if Necessary

- If draw ratio is too high or low, switch to a higher or lower UHO stage to recalibrate.
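The final iteration step can be expressed as a simple decision rule. The sketch below assumes the UHO opening files are indexed from 0 upwards in order of increasing White advantage and uses the 45%–60% draw‑ratio window mentioned above as the target. The stage indexing, the number of stages and the function name are hypothetical.

```python
def next_stage(current_stage: int, draw_ratio: float, num_stages: int,
               low: float = 0.45, high: float = 0.60) -> int:
    """Suggest which opening stage to use for the next test run.

    Stages are assumed to be numbered 0 .. num_stages - 1, with a higher
    number meaning a larger White advantage and hence fewer draws.
    """
    if not 0 <= current_stage < num_stages:
        raise ValueError("current_stage out of range")
    if draw_ratio > high:
        # Too many draws: move to a more unbalanced set, if one exists.
        return min(current_stage + 1, num_stages - 1)
    if draw_ratio < low:
        # Too few draws: move back to a less unbalanced set, if one exists.
        return max(current_stage - 1, 0)
    return current_stage


# Made-up example with four stages.
print(next_stage(0, 0.66, 4))  # 1
print(next_stage(2, 0.50, 4))  # 2
print(next_stage(0, 0.40, 4))  # 0
```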

By aligning your resources, objectives and desired confidence levels with the strengths of each system, you can choose the most efficient and statistically sound approach for your engine‑strength measurements.

In the domain of chess‑engine benchmarking, no single methodology universally outperforms every other; rather, each approach excels under specific circumstances. The CCRL framework offers unparalleled statistical rigour through massive game volumes, standardised hardware calibration and transparent rating calculations. This makes it the gold standard when the goal is a comprehensive, definitive ranking of engines over time, especially when hardware homogeneity or cross‑machine comparability is paramount.

rapid testing and resource constraints

Conversely, the UHO suite shines in contexts where rapid testing and resource constraints are critical. By leveraging human‑played positions, eval‑filtered imbalances and custom rescoring, UHO achieves Elo reliability with far fewer games, reducing computational expense and time. Its flexible draw‑ratio management suits environments—such as Fishtest servers or academic experiments—where dynamic hardware and heterogeneous engine strengths necessitate adaptive opening sets to maintain informative results.

When choosing a method, consider:

- Available computational power: CCRL demands millions of games; UHO thrives on thousands.

- Need for hardware normalisation: CCRL’s calibration ensures comparability; UHO assumes local time controls suffice.

- Importance of minimal draws: UHO’s adjustable sets directly target draw ratio; CCRL relies on natural engine competition.

- Bias correction: UHO provides tools (AAS, Gamepair Rescorer) to mitigate opening imbalance; CCRL treats all book lines equally.

In my assessment, CCRL remains the stronger choice for long‑term monitoring of engine progress on dedicated hardware farms, offering depth, continuity and community‑validated reliability. For rapid prototyping, comparisons under extreme Elo disparities, or settings where the game count must be minimised, the UHO suite presents a compelling, efficient alternative. Its combination of human authenticity and engine‑driven filtering strikes a balance between real‑game relevance and statistical robustness. Ultimately, the best method depends on your specific goals: maximum statistical certainty favours CCRL, while lean, adaptive testing favours UHO.

Bibliography

- Stefan Pohl, “Unbalanced Human Openings 2024,” SP‑CC, accessed April 17, 2025, https://www.sp-cc.de/uho_2024.htm

- CCRL Team, “Welcome to the CCRL (Computer Chess Rating Lists) website,” ComputerChess.org.uk, accessed April 17, 2025, https://computerchess.org.uk/ccrl/

- CCRL 40/15 Rating List — Testing summary and methodology, accessed April 14, 2025, https://computerchess.org.uk/ccrl/4040/

- CCRL Blitz Rating List — Testing summary and methodology, accessed April 11, 2025, https://computerchess.org.uk/ccrl/404/

Jorge Ruiz Centelles

Philologist and lover of African social anthropology
