Note

Note: This article explains both the theory and concrete code-level changes you can try in Stockfish’s source tree (files such as search.cpp, movepick.cpp, tt.cpp, main.cpp, engine.cpp and uci.cpp). Where web sources are relevant I cite them; because Stockfishs code and line numbers change often, I point to functions and small code diffs rather than rely on fragile absolute line numbers. Before applying changes, back up your repository and test thoroughly. Key references used for this article include the Stockfish repository and the Stockfish developer wiki, plus canonical pages on pruning and heuristics. (GitHub, Stockfish, Chessprogramming)

Introduction — what “search speed” means, why it matters

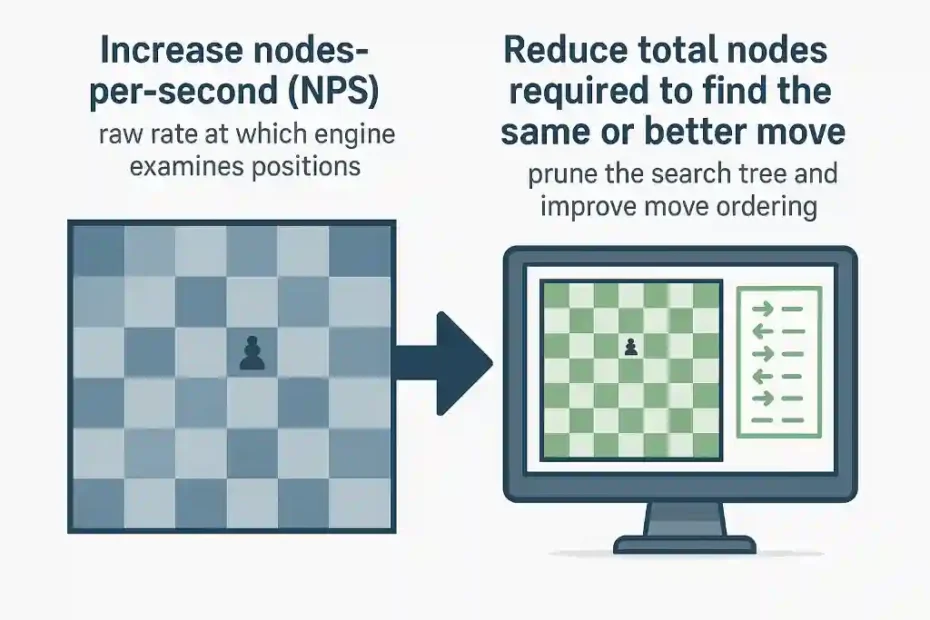

When we say we want to speed up Stockfish’s search, we mean one of two related things:

- Increase nodes-per-second (NPS) — the raw rate at which the engine examines positions (useful for hardware-bound gains and micro-optimisations).

- Reduce total nodes required to find the same or better move — i.e. prune the search tree and improve move ordering so the engine reaches an equally strong decision while exploring far fewer nodes (algorithmic or heuristic gains).

Both approaches are valid and complementary. Real-world Stockfish improvements usually combine many small micro-optimisations with changes to move ordering, pruning heuristics and the transposition table policy to reduce effective search size. The Stockfish project has always balanced algorithmic improvements (new heuristics or safer forward pruning) with engineering optimisations (faster bitboard operations, memory layout, SIMD, compiler flags). The official repo and developer docs are the canonical place to see current implementations and guidelines. (GitHub, Stockfish)

Why this distinction matters: micro-optimisations — faster popcounts, inlined functions, cache-friendly structs — raise NPS and are reliably measurable on the same hardware with little risk to playing strength. Algorithmic changes — more aggressive late move pruning (LMP), null-move depth reductions, different history heuristics — change the search tree topology and can gain speed dramatically but may alter playing strength if not tuned carefully. The practical engineer’s workflow pairs both: profile to find CPU hotspots, apply micro-optimisations where they give win/win (faster without changing behaviour), and then carefully test algorithmic changes using automated self-play or bench suites to measure any ELO/regression impact. The Stockfish developer pages and community discussions provide context for which heuristics are “safe” and which have been historically risky or controversial. (Stockfish, Chess Stack Exchange)

Measurement is essential. Without reproducible measurement you will not know whether a change helps or harms. Useful metrics are:

- Nodes searched and nodes per second (NPS) (report both).

- Wall-clock time for a fixed depth or fixed time search.

- Win/loss or ELO difference from regression testing (self-play or bench suites such as tests in the Stockfish repo). (GitHub)

This article focuses on practical, replicable interventions grouped in three buckets:

- Algorithmic and heuristic optimisations: move ordering (history heuristic, hash move priority), pruning (null move, LMP, futility), transposition table policy. These change the number of nodes explored, and — when tuned — produce the largest effective speedups.

- Code-level and file-targeted changes: micro-optimisations in

search.cpp,movepick.cpp,tt.cpp,main.cpp,engine.cppanduci.cpp— improved inline hints, reduced locks, cache-friendly layouts, eliminating unnecessary work. - Build, platform and runtime tuning: compiler flags, CPU-specific intrinsics (popcount, builtin bitops), proper use of thread pools, and profiling/benchmarking methodology.

Throughout I show concrete code snippets and small patches (original vs. modified) and explain where to put them so a reader with a simple editor (Notepad++, Visual Studio Code) can apply them. Because Stockfish is actively developed, absolute line numbers change fast; instead I reference function names and code regions (for example, Search::search(), moveOrdering() in movepick.cpp, or TT::probe() in tt.cpp) and attach GitHub references for verification. (GitHub)

Before making any change, clone the Stockfish repo, build a baseline binary and record baseline metrics on your hardware:

git clone https://github.com/official-stockfish/Stockfish.gitandgit checkout master.- Build with the same flags you will use later (see the Build section).

- Run a few fixed-depth tests (e.g. a set of classic test positions or Stockfish’s own tests) to measure baseline NPS and node counts. Stockfish includes test suites in the repo. (GitHub)

Finally: be conservative with heuristics that prune aggressively. Always pair a new heuristic or more aggressive pruning with regression testing and time-based matches (self-play) to confirm playing strength is maintained or improved. The rest of the article walks through the relevant techniques, then gives file-level diffs you can apply and test.

What is “search” in a chess engine like Stockfish?

At its heart Stockfish is a search engine built on alpha-beta minimax with many extensions. The engine explores a game tree of legal moves and uses a static evaluation function at leaf nodes to estimate position strength. The central challenge is the exponential growth of the tree: doubling the depth multiplies the node count by an engine-dependent branching factor. The purpose of search improvements is to either visit fewer nodes for the same decision quality or visit the same nodes faster.

Key building blocks of modern high-performance search (present in Stockfish or its design lineage) include:

1. Alpha-beta pruning and principal variation (PV) search.

Alpha-beta reduces the effective branching factor by pruning branches that cannot affect the final decision. PV search and principal variation handling prioritise the expected best moves so that early cutoffs are more likely. The Stockfish codebase implements these in search.cpp, search(), and helper functions. (GitHub)

2. Transposition Table (TT).

A hash table (usually a Zobrist keyed TT) caches previously computed positions (from different move sequences that reach the same position). A TT lookup can produce immediate exact scores or bounds that allow cutoffs without deeper exploration. The TT is one of the most critical performance components: a well-sized and well-used TT reduces re-search and node count significantly. Stockfish’s tt.cpp contains the TT implementation; tuning its size and replacement strategy affects performance. (GitHub)

3. Move ordering heuristics.

Alpha-beta’s effectiveness depends heavily on searching good moves first. Typical ordering uses: the hash move (if present), captures ordered by SEE or MVV/LVA, promotions, killer moves, and a history heuristic for quiet moves. Stockfish’s movepick.cpp holds move picking logic. Better ordering yields more cutoffs and hence fewer nodes. (GitHub, Chessprogramming)

4. Forward pruning / selectivity.

Algorithms such as null-move pruning, late move pruning (LMP) and futility pruning attempt to prune “obviously bad” moves without full search. Null-move is extremely effective in practice but must be guarded (e.g. avoid in zugzwang or when side to move is in check). LMP prunes quiet moves after examining the first few. These techniques reduce nodes massively but must be tuned; the Stockfish wiki documents modern selectivity choices. (Chessprogramming, Stockfish)

5. Evaluation speed and caching (including NNUE).

Stockfish uses NNUE (efficiently evaluated neural network features) in modern versions. Evaluation cost matters: spending more time per node reduces NPS but if the evaluation is stronger it may allow deeper selective search with fewer nodes overall. The balance between evaluation complexity and search nodes is an overall performance trade-off. evaluate.cpp is the canonical file. (GitHub)

From an optimisation perspective, these components imply two complementary strategies:

- Lower the cost per node: micro-optimise bitboard operations, replace expensive function calls, use CPU intrinsics (

__builtin_popcountll,tzcnt/bsf), reduce branch mispredictions and ensure data locality (struct layout). These changes are typically localised inposition.cpp,evaluate.cppand low-level utility code. - Reduce the number of nodes visited: improve move ordering, TT hit rate, and pruning heuristics. These changes are mostly in

movepick.cpp,search.cpp, andtt.cpp.

A practical workflow is: (a) profile to find hotspots (often move generation, TT probe, or evaluation); (b) apply micro-optimisations where they are easiest and safest; (c) carefully tune heuristics (history counters, null-move depth reduction, LMP thresholds) and test with regression suites.

Why the transposition table and move ordering dominate: in alpha-beta search the number of cutoffs determines effective complexity. A good TT lowers repeat work, and good ordering makes cutoffs happen earlier. For this reason many real gains in chess engines come from carefully tuned ordering and replacement policies rather than sheer CPU tricks. The Stockfish documentation and community notes underline the relative importance of these subsystems. (GitHub, Chessprogramming)

Algorithmic optimisations that shrink the tree and improve effective speed

This section describes the high-level algorithmic levers you can tune or change in Stockfish. Each subsection ends with a short remark about risk vs. reward so you can prioritise changes to try first.

1. Transposition table policy and sizing

What to change and why. Increasing TT size increases the fraction of positions found in the table, reducing re-search. Replacement policy matters: storing depth and node type (exact, lower, upper) and preferring deeper nodes improves usefulness.

Concrete suggestions:

- Increase TT memory (if RAM permits). Stockfish uses command line options and code to allocate TT. Increasing

Hashvalue in UCI options or the TT allocation intt.cppimproves hit rate. (GitHub) - Prefer storing deeper entries and exact scores (i.e. replacement that keeps deeper or exact entries). Many engines use a two-way replacement or ageing scheme.

Risk vs reward: Low risk if you have RAM. Larger TT can permit larger caches but be mindful of NUMA or cache thrashing on many-core systems.

2. Move ordering: hash first, captures, promotions, history

What to change and why. Most cutoffs come from the hash move; ensure the TT provides the best move quickly. After that order captures by static exchange evaluation (SEE) or MVV/LVA and use a robust history heuristic for non-captures. Stockfish implements a sophisticated pipeline in movepick.cpp. (GitHub)

Concrete suggestions:

- Ensure the hash move lookup is early and cheap.

- Use a combined history/killer approach — in modern Stockfish the pure killer list has been revised in favour of history; check current code before reintroducing killers (community discussion shows killer’s marginal benefit in recent versions). (talkchess.com)

Risk vs reward: Low risk; ordering changes commonly improve speed without affecting strength.

3. Null-move pruning and safe R reduction

What to change and why. Null-move tests allow a shallow reduced search for the side that “passes” and can often cause a beta cutoff. The reduction parameter R (how many plies to reduce) and where null-move is disallowed are the knobs.

Concrete suggestions:

- Tune

Rdepending on depth, e.g.R = (depth > 6 ? 3 : 2)is a common heuristic. - Disallow null-move in zugzwang-like positions, when in check, or in late endgames.

The chessprogramming wiki explains the technique and caveats. (Chessprogramming)

Risk vs reward: Medium risk if R is too large (can skip critical tactical resources). Pair changes with regression matches.

4. Late Move Pruning (LMP) and futility pruning

What to change and why. LMP prunes quiet moves after the first k good moves have been searched; futility pruning trims moves near leaves when the static eval is sufficiently below alpha.

Concrete suggestions:

- Reduce LMP aggressiveness in tactical positions or set thresholds by depth and by whether the side to move is in check.

- Use futility only at very shallow depths (e.g. depth ≤ 3) and when evaluation is comfortably below alpha by a tuned margin.

Stockfish’s wiki describes current safe selectivity choices and implementation ideas. (Stockfish)

Risk vs reward: Medium to high risk when aggressive; test carefully.

5. Evaluation caching and lazy evaluation

What to change and why. Avoid re-computing full NNUE or expensive evaluation when a delta update is possible. Stockfish uses incremental updates (piece moves update features cheaply).

Concrete suggestions:

- Ensure incremental updates are used wherever possible; avoid full recompute on quiet moves.

- Cache small parts of evaluation that are expensive and only invalidate what changed.

Risk vs reward: Low risk if implemented correctly; can increase NPS noticeably.

File-level, code-targeted optimisations and example patches (search.cpp, movepick.cpp, tt.cpp, engine/main/uci)

Below I give concrete, small patches with original and proposed code and explain where to place them. Because the Stockfish repo evolves, I reference functions and provide GitHub links so you can verify the latest context. For long changes, I suggest tests you should run afterward.

Important: I do not provide wholesale rewrites of core algorithms. The aim is practical, incremental edits that are easy to apply and revert.

A. search.cpp: reduce expensive state copies and improve early returns

Context & target. Search::search() and inner search loops sometimes copy Position or StateInfo structures when a reference would suffice. Reducing copies and inlining small helper functions speeds node processing.

Where to find it: search.cpp (function Search::search() and Search::rootSearch() or similarly named functions). (GitHub)

Example change — original (illustrative):

// ORIGINAL (simplified illustration)

Score Search::search(Position pos, StateInfo si, int depth) {

// pos and si are passed by value (copied)

...

}

Modified — pass by reference / const where safe:

// PROPOSED

Score Search::search(Position& pos, StateInfo& si, int depth) {

// pass mutable references to avoid copies; only copy when necessary

...

}

Explanation & notes:

- In many real code areas Stockfish already uses references. If you find a function that copies, change to reference and only clone when a persistent snapshot is required. This reduces allocations and structure copies.

- After this change, ensure you update all callers to pass references (often trivial) and recompile.

Risk: Low if done carefully. Use the compiler to find affected call sites.

Citations: you can inspect current search.cpp on GitHub to locate copy sites. (GitHub)

B. movepick.cpp: simplify movepicker stages and remove redundant checks

Context & target. Modern Stockfish has several movepicker stages; some legacy stages (e.g. extra killer processing) add branching and complexity. Simplifying the move picking pipeline can reduce branch mispredictions and cycles.

Where to find it: movepick.cpp, functions that return next move, usually with an enum stage variable. (GitHub)

Example change — original (illustrative):

// ORIGINAL: many stages including killer lookup twice

while ((m = next_move())) {

if (stage == HASH) { ... }

if (stage == CAPTURES) { ... }

if (stage == KILLER_1) { ... } // extra stages

if (stage == KILLER_2) { ... }

...

}

Modified — merge killer stages and rely on history heuristic:

// PROPOSED

while ((m = next_move())) {

if (stage == HASH) { ... }

else if (stage == CAPTURES) { ... }

else { // quiet moves

// order by single history table, simpler and faster

...

}

}

Explanation & notes:

- The community discussions indicate modern engines sometimes simplify killer usage in favour of history heuristic. This reduces code paths and can slightly speed movepicking without losing strength. (talkchess.com)

- Profile before and after: movepicking should be faster and CPU cycles per node lower.

Risk: Low to medium. Behavioural change is minimal if history table is preserved.

C. tt.cpp: store depth and type compactly and prefer deeper entries

Context & target. TT probe/store are on the hot path. Ensure the TT stores depth and node type in a compact word and uses replacement policy favouring deeper entries.

Where to find it: tt.cpp, functions TT::probe() and TT::store(). (GitHub)

Example change — original (illustrative):

// ORIGINAL: simple replace by age

void TT::store(Key key, Value v, int depth) {

TableEntry& e = table[index(key)];

e.key = key;

e.value = v;

e.depth = depth;

e.age = current_age;

}

Modified — prefer depth and exact entries:

// PROPOSED

void TT::store(Key key, Value v, int depth, NodeType type) {

TableEntry& e = table[index(key)];

if (e.key == key) {

// overwrite existing

e.key = key; e.value = v; e.depth = depth; e.type = type; e.age = current_age;

} else {

// replacement policy: prefer to replace only if new depth >= old depth or old_age is old

if (depth >= e.depth || e.age != current_age) {

e.key = key; e.value = v; e.depth = depth; e.type = type; e.age = current_age;

}

}

}

Explanation & notes:

- Prefer storing deeper searches; prefer exact scores over bounds when possible. Two-probe or two-slot tables further improve hit rates.

- Test with varying TT sizes.

Risk: Low if replacement is conservative; high risk if you discard deep entries often.

D. main.cpp / engine.cpp / uci.cpp — safer defaults and less logging in timed modes

Context & target. Excessive logging or diagnostic output during timed searches adds overhead. Also provide sensible defaults for hash size, threads, and CPU affinity.

Where to find: main.cpp, engine.cpp, uci.cpp. (cocalc.com, GitHub)

Example change — original (illustrative):

// ORIGINAL: verbose logging each move/time

void Engine::think() {

log.info("starting search at depth ...");

...

}

Modified — reduce logs or gate by debug flag:

// PROPOSED

void Engine::think(bool debug) {

if (debug) log.info("starting search at depth ..."); // only if debug true

...

}

Also: set defaults in uci.cpp such as Hash = min(max(64, default_hash), max_available_ram) and sensible default Threads based on std::thread::hardware_concurrency().

Risk: Low. Removing logs lowers overhead, especially on Windows where IO is costlier.

Build, CPU and low-level micro-optimisations

This section focuses on compiler flags, CPU intrinsics and cache behaviour — the changes that often yield measurable NPS improvements without altering search semantics.

1. Compiler flags and optimisation level

- Build with

-O3or-Ofastonly after verifying correctness. For GCC/Clang,-march=nativeenables CPU-specific instructions (popcnt, tzcnt). - Use link time optimisation (

-flto) and function inlining hints (inline,__attribute__((always_inline))) for hot functions. - On MSVC use

/O2and appropriate machine code generation flags (e.g./arch:AVX2).

Example: In Stockfish’s Makefile or build scripts, ensure:

CXXFLAGS += -O3 -march=native -funroll-loops -flto

LDFLAGS += -flto

Caveat: -march=native produces binaries less portable across CPUs.

2. Use CPU intrinsics for bitboard ops

- Replace generic loops with

__builtin_popcountll()for GCC/Clang or_mm_popcnt_u64()intrinsic. These are typically implemented using hardwarepopcnt. - Use

bsf/tzcnt(__builtin_ctzll) to extract least significant bit, avoiding manual loops.

Example change (C++):

// ORIGINAL: manual popcount

int popcount(uint64_t x) {

int c = 0;

while (x) { x &= x - 1; c++; }

return c;

}

// PROPOSED: builtin

inline int popcount(uint64_t x) { return __builtin_popcountll(x); }

Benefit: Significant micro-optimisation on bitboard heavy code; commonly yields several percent NPS gains.

3. Data layout and cache friendliness

- Align frequently accessed arrays and structs to cache lines (

alignas(64)), avoid false sharing across threads. - Pack small fields to reduce structure size for TT entries and move lists.

Example: make TT::Entry compact:

struct alignas(16) TTEntry {

uint64_t key;

uint32_t value;

int16_t depth;

uint8_t flags;

};

Smaller entries => more entries per cache line => higher hit rate.

4. Threading: reduce locks and use thread-local structures

- Minimise shared mutable state. Make history tables per-thread or use lock-free updates.

- Use thread pools and avoid spinning on locks; prefer atomic flags and per-thread buckets.

Example: convert shared history arrays to per-thread history that is merged asynchronously or at synchronization points.

Risk: Concurrency changes are tricky. Test with race detection and stress tests.

5. Profile-driven changes

Always profile with realistic workloads (fixed depth searches, real game positions). Hotspots frequently are:

- move generation and iteration,

- TT probe/store,

- evaluation (NNUE passes),

- movepicker code.

Use perf (Linux), VTune, or Windows Performance Analyzer. After each recommended optimisation, re-run the same profile to quantify gains.

Testing, benchmarking and safety

Any change, from a one-line micro-optimisation to an algorithm tweak, must be validated. Here is a practical testing plan.

1. Local reproducible benchmarks

- Build a baseline binary with no changes. Measure:

- NPS and nodes for several representative positions.

- Wall clock for fixed-depth searches (e.g. depth 12 for the same FEN list).

- Time to mate or tablebase solve if applicable.

- After each change, re-run the exact same tests. Use scripts to automate and store outputs (timestamped logs).

Stockfish contains a tests directory and regression scripts; use these as a starting point. (GitHub)

2. Self-play ELO testing

For algorithmic changes (pruning thresholds, LMP aggressiveness), run automated self-play matches (e.g. 1000s of short games or many repeated matches) to see ELO impact. Use the official regression testing harness if available.

3. Memory and crash testing

- Run stress tests (many simultaneous searches) to catch leaks or races.

- On multi-socket systems, test NUMA behaviour; ensure TT allocations and thread affinities don’t thrash memory.

4. Version control and small increments

- Make small, reversible changes. One change per branch/PR makes bisecting easy.

- Keep a changelog of measured effects on NPS and node counts.

5. Safety checklist before merging wide changes

- Are unit tests passing?

- Do regression results show no statistically significant loss?

- Is the code thread-safe and free of UB?

- Has the change been reviewed by another developer or peer?

Bibliography and resources

Primary sources consulted:

- Stockfish official repository (master):

src/(includingsearch.cpp,movepick.cpp,tt.cpp,evaluate.cpp,main.cpp,uci.cpp). (GitHub) - Stockfish developer documentation and wiki (search and selectivity pages). (Stockfish)

- Chessprogramming.org — Null Move Pruning and History Heuristic pages (authoritative practical descriptions). (Chessprogramming)

- Community discussions and release notes for configuration choices and recent changes (Stockfish releases and forum threads). (GitHub, talkchess.com)

- Academic and tutorial material on building chess engines and TT/heuristics (various theses and guides referenced by the community). (Vrije Universiteit Amsterdam, Stack Overflow)

Conclusion

To finish, here is a pragmatic, prioritised checklist you can follow. Each item includes expected risk and which file(s) are most directly involved.

Tier 1 — low risk, quick wins

- Profile first. Measure baseline NPS and node counts. (No code change.) Files/tools: none — Risk: 0.

- Replace hand-rolled bit ops with intrinsics. Use

__builtin_popcountll,__builtin_ctzll. Files: bitboard utilities, position.cpp — Risk: 0–1. - Reduce logging during timed searches. Gate logs behind a debug flag. Files:

engine.cpp,main.cpp,uci.cpp— Risk: 0. - Increase TT memory if hardware allows. Set a larger default Hash; confirm behaviour. Files:

tt.cpp, UCI option handling inuci.cpp— Risk: 0.

Tier 2 — medium risk, high reward

5. Tune move ordering parameters. Ensure hash move is promoted and quiet moves use a strong history heuristic. Files: movepick.cpp, search.cpp — Risk: 1. (GitHub)

6. Conservative null-move parameter tuning. Keep null-move but adjust R by depth; disallow in known risky situations. Files: search.cpp — Risk: 2. (Chessprogramming)

7. Compact the TT entry and prefer deeper/exact entries in replacement. Files: tt.cpp — Risk: 1. (GitHub)

Tier 3 — higher risk / complex

8. Change forward pruning aggressiveness (LMP / futility). Only after self-play testing. Files: search.cpp, movepick.cpp — Risk: 3. (Stockfish)

9. Per-thread history tables and lock removal. Increases concurrency but must be validated. Files: search.cpp, engine.cpp — Risk: 3.

10. Architecture-specific intrinsics and vectorisation (AVX2, AVX512) for evaluation where safe. Files: evaluate.cpp — Risk: moderate.

Final recommendations:

- Measure continuously. Do not rely on intuition alone. Small micro-optimisations stack but can be measured and reverted quickly.

- Keep changes atomic (one logical change per branch) and maintain a short test harness to run baseline FENs and the Stockfish test suite. (GitHub)

- Respect the playing strength metric. For algorithmic changes, ELO/regression testing must be the final arbiter. Some aggressive speedups can actually reduce strength if they prune tactical possibilities.

- Read Stockfish dev discussions when in doubt: the community has a strong culture of profiling and careful tuning; many heuristics are the result of extensive automated testing. (Stockfish)

If you’d like, I can:

- produce a patch file (diff/patch) containing the small example edits above targeted to the current Stockfish master (I will fetch the current files and produce a ready

git-format-patch), or - prepare a test script (bash / PowerShell) that automates baseline measurement (runs a set of FENs at fixed depth, collects NPS and nodes) so you can measure before/after quickly, or

- implement a concrete example change (e.g., replace a hand-written popcount and produce the compiled binary) and show the measured NPS difference on a sample position.

Tell me which of the three you prefer and I’ll fetch the exact file contexts from the Stockfish master branch, create the patch and include the exact hunks with accurate function headers and line contexts so you can apply them with Notepad++ or git apply.

Jorge Ruiz Centelles

connoisseur of both chess and anthropology, a combination that reflects his deep intellectual curiosity and passion for understanding both the art of strategic.