Building a UCI NNUE Chess Engine

This article presents a complete, end-to-end roadmap for building a modern chess engine in C++ that speaks the Universal Chess Interface (UCI) and evaluates positions using NNUE (Efficiently Updatable Neural Networks). We begin with protocol and software design, continue through board representation and move generation, formalise search and time management, and then integrate an NNUE evaluator with accumulator updates and integer-domain inference. We conclude with training guidance, benchmarking, correctness testing, and production hardening. Key references are provided to the Chess Programming Wiki (UCI and NNUE), the UCI summary on Wikipedia, and Stockfish NNUE resources and documentation. (chessprogramming.org)

1) What “UCI + NNUE in C++” Really Means

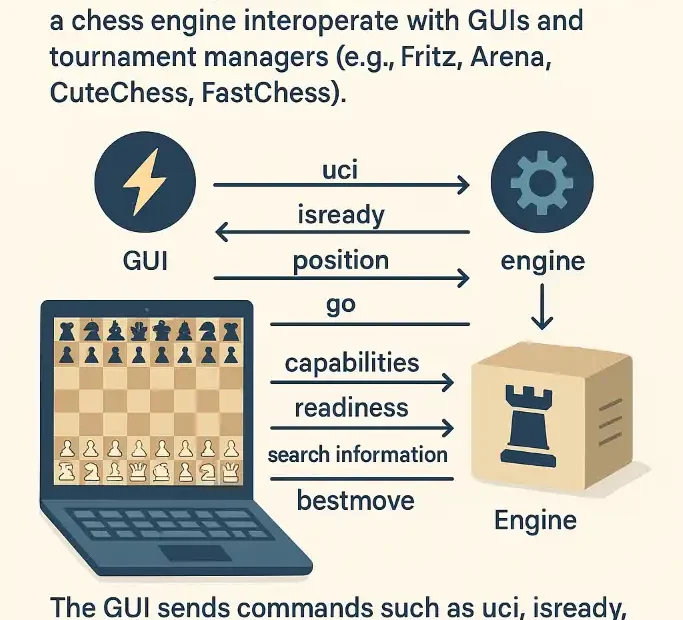

UCI is a text protocol over stdin/stdout that lets a chess engine interoperate with GUIs and tournament managers (e.g., Fritz, Arena, CuteChess, FastChess). The GUI sends commands such as uci, isready, position, and go, and the engine responds with capabilities, readiness, search information, and a bestmove. The protocol’s enduring popularity stems from its simplicity, portability, and clear division of labour between GUI and engine. (chessprogramming.org)

NNUE is a CPU-friendly neural-network evaluation first used in shogi and later adopted by chess engines such as Stockfish. It exploits the fact that board states change incrementally between plies: the evaluator caches partial results (an “accumulator”) and updates them cheaply when a move is made or unmade. Networks are quantised (int8/int16). The first Stockfish NNUE networks used HalfKP features (king-relative piece-square encodings), and later networks use refined variants of the same idea. The result is a strong, fast evaluation that pairs excellently with alpha–beta search. (chessprogramming.org)

2) Minimal Feature Set for a First Release (MVP)

- Protocol: UCI loop with uci, isready, ucinewgame, position [startpos|fen ...] moves ..., go [movetime|wtime btime winc binc depth nodes], stop, quit. Optional: setoption name ... value .... (chessprogramming.org)

- Board core: Bitboards, legal move generation (including castling, en passant, promotions), make/unmake moves, Zobrist hashing.

- Search: Negamax with alpha–beta; iterative deepening; transposition table (TT); MVV-LVA captures; simple history/killers; aspiration windows.

- Evaluation: NNUE accumulator, incremental update on make/unmake; integer inference; fallback PSQT as a safety net. (official-stockfish.github.io)

- Timing: Basic time manager with safety margins; node counting; stop flags.

- Testing: perft for move-gen correctness; self-play smoke tests; FEN loader; UCI compliance checks.

This scope is realistic for an initial engine while leaving room for later enhancements (TBs, SMP, late move pruning, razoring, etc.).

3) Project Layout and Build System

A clean layout accelerates iteration and testing:

```
/engine
  /src
    uci/       # protocol and options
    core/      # bitboards, movegen, hash, zobrist
    search/    # iterative deepening, time mgmt, tt
    eval/      # nnue/, psqt/
      nnue/    # layers, accumulator, quantization
    util/      # logging, endian, bitops, simd wrappers
  /include
  /tests       # perft, unit tests
  /nets        # *.nnue (not tracked by git LFS)
  CMakeLists.txt
  README.md
  LICENSE
```

- CMake: provide -O3, link-time optimisation (LTO), and per-target SIMD flags (e.g., -msse4.1, -mavx2, -mbmi2), but allow a baseline build.

- Consider -fno-exceptions/-fno-rtti for the engine's hot-path code. These flags apply per translation unit, not per loop. Prefer noexcept and POD types in movegen and eval hot paths.

4) UCI: Parsing, Options, and I/O Discipline

UCI engine skeleton (C++17+):

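```cpp
#include <iostream>
#include <string>

int main() {
    std::ios::sync_with_stdio(false);
    std::cin.tie(nullptr);     // cout is no longer flushed before each read: flush explicitly

    Engine engine;             // owns board, searcher, eval, TT, options (defined elsewhere)
    std::string line;

    while (std::getline(std::cin, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate CRLF input

        if (line == "uci") engine.cmdUci();                 // print id, options, uciok
        else if (line == "isready") engine.cmdIsReady();    // print readyok
        else if (line.rfind("setoption", 0) == 0) engine.cmdSetOption(line);
        else if (line == "ucinewgame") engine.cmdUciNewGame();
        else if (line.rfind("position", 0) == 0) engine.cmdPosition(line);
        else if (line.rfind("go", 0) == 0) engine.cmdGo(line);  // should start the search on a
                                                                // worker thread and return, or
                                                                // "stop" is never read mid-search
        else if (line == "stop") engine.cmdStop();
        else if (line == "quit") { engine.cmdStop(); break; }
        // Optional: "perft N" and "bench"
    }
}
```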

- Flush every complete response. With std::cin.tie(nullptr), output is no longer flushed automatically before input is read, so write std::cout << std::flush after lines like bestmove e2e4.

- Options: expose Hash, Threads, OwnBook (optional), UseNNUE, and EvalFile (path to network). Document default values and ranges in the uci response. See Stockfish’s UCI commands overview for established patterns. (official-stockfish.github.io)

- Positions: support startpos and arbitrary FEN. After position ... moves ..., the engine should reproduce the final state deterministically.

Background references for protocol semantics: Chess Programming Wiki’s UCI page and Wikipedia overview. (chessprogramming.org)
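Below is a minimal sketch of the token handling behind cmdPosition. It assumes the board applies the moves afterwards. The sketch splits a position command into a FEN string, expanding startpos to the standard starting FEN, and a list of long-algebraic moves. PositionCmd and parsePosition are hypothetical names and are not part of any library.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical result of parsing "position [startpos | fen <FEN>] [moves m1 m2 ...]".
struct PositionCmd {
    std::string fen;
    std::vector<std::string> moves;  // long algebraic, e.g. "e2e4", "e7e8q"
};

inline const std::string START_FEN =
    "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1";

PositionCmd parsePosition(const std::string& line) {
    std::istringstream in(line);
    std::string tok;
    PositionCmd cmd;
    in >> tok;                           // "position"
    in >> tok;                           // "startpos" or "fen"
    if (tok == "startpos") {
        cmd.fen = START_FEN;
        in >> tok;                       // "moves", if present
    } else if (tok == "fen") {
        // Collect FEN fields until "moves" or end of line.
        while (in >> tok && tok != "moves") {
            if (!cmd.fen.empty()) cmd.fen += ' ';
            cmd.fen += tok;
        }
    }
    if (tok == "moves")
        while (in >> tok) cmd.moves.push_back(tok);
    return cmd;
}
```

Keeping parsing separate from move application lets you unit-test it without a board. The board then resolves each move string against its own legal-move list, which also rejects malformed input.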

5) Board Representation and Move Generation

5.1 Bitboards

Represent each piece set with a 64-bit integer. Typical layout:

- 12 bitboards: {white/black} × {pawn, knight, bishop, rook, queen, king}.

- Side-to-move bit, castling rights, en-passant file, half-move clock, full-move number.

- Precompute attack masks (knights, kings, pawn pushes/captures). Use magic bitboards or PEXT-based sliding attacks for bishops/rooks.
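The precomputed attack masks mentioned above can be built once at start-up. This sketch assumes the common square numbering, with a1 = 0 through h8 = 63, and covers knight masks only. The file masks stop shifted bits from wrapping between the a-file and the h-file. Names such as KNIGHT_ATTACKS are illustrative.

```cpp
#include <array>
#include <bitset>
#include <cstdint>

using Bitboard = std::uint64_t;

// Square indexing assumption: a1 = 0, b1 = 1, ..., h8 = 63.
constexpr Bitboard FILE_A = 0x0101010101010101ULL;
constexpr Bitboard FILE_B = FILE_A << 1;
constexpr Bitboard FILE_G = FILE_A << 6;
constexpr Bitboard FILE_H = FILE_A << 7;

constexpr Bitboard bit(int sq) { return Bitboard{1} << sq; }

inline int popcount(Bitboard b) { return static_cast<int>(std::bitset<64>(b).count()); }

// Knight targets from one square; each shift is masked so it cannot wrap across the board edge.
constexpr Bitboard knightAttacks(int sq) {
    const Bitboard b = bit(sq);
    const Bitboard notA = ~FILE_A, notAB = ~(FILE_A | FILE_B);
    const Bitboard notH = ~FILE_H, notGH = ~(FILE_G | FILE_H);
    return ((b << 17) & notA)    // up 2, right 1
         | ((b << 15) & notH)    // up 2, left 1
         | ((b << 10) & notAB)   // up 1, right 2
         | ((b <<  6) & notGH)   // up 1, left 2
         | ((b >> 17) & notH)    // down 2, left 1
         | ((b >> 15) & notA)    // down 2, right 1
         | ((b >> 10) & notGH)   // down 1, left 2
         | ((b >>  6) & notAB);  // down 1, right 2
}

// Lookup table filled once; move generation then ANDs it with ~ownPieces.
const std::array<Bitboard, 64> KNIGHT_ATTACKS = [] {
    std::array<Bitboard, 64> t{};
    for (int sq = 0; sq < 64; ++sq) t[sq] = knightAttacks(sq);
    return t;
}();
```

King and pawn masks follow the same pattern. Sliding pieces need magic bitboards or PEXT because their attacks depend on the occupancy.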

5.2 Legal Move Generation

- Generate pseudolegal moves; then filter by king-in-check legality (or inlined pinned-mask technique).

- Handle special moves: promotions, castling (check rook paths), en passant (verify not leaving king in check).

- Maintain Zobrist hash on make/unmake for TT indexing; update piece, side, castling, EP keys per move.
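Here is a sketch of Zobrist hashing under assumed conventions: 12 piece kinds indexed 0 to 11, a 4-bit castling-rights mask, and an en-passant file index. Keys come from the splitmix64 generator with a fixed seed, so hashes are reproducible between runs. The quietMoveKey helper is hypothetical and covers only a non-capturing, non-special move.

```cpp
#include <array>
#include <cstdint>

struct Zobrist {
    std::array<std::array<std::uint64_t, 64>, 12> piece{};  // [pieceKind][square]
    std::array<std::uint64_t, 16> castling{};               // indexed by 4-bit rights mask
    std::array<std::uint64_t, 8> epFile{};
    std::uint64_t sideToMove = 0;

    explicit Zobrist(std::uint64_t seed = 0x9E3779B97F4A7C15ULL) {
        auto next = [&seed] {                                // splitmix64
            std::uint64_t z = (seed += 0x9E3779B97F4A7C15ULL);
            z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
            z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
            return z ^ (z >> 31);
        };
        for (auto& row : piece) for (auto& k : row) k = next();
        for (auto& k : castling) k = next();
        for (auto& k : epFile) k = next();
        sideToMove = next();
    }
};

// Quiet move: XOR the piece out of `from`, into `to`, and flip the side to move.
// XOR is self-inverse, so applying the same update again restores the old key.
inline std::uint64_t quietMoveKey(const Zobrist& z, std::uint64_t key,
                                  int pieceKind, int from, int to) {
    return key ^ z.piece[pieceKind][from] ^ z.piece[pieceKind][to] ^ z.sideToMove;
}
```

Captures, castling, promotions and changes to castling or en-passant rights XOR in further keys the same way. Unmake can either reapply the same XORs or simply restore a saved key.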

5.3 Correctness: perft

Implement perft(depth) to count the leaf nodes of the legal move tree down to a given depth. Validate against known test positions (Kiwipete, etc.). Perft is your first defence against illegal moves corrupting search.

6) Search: Iterative Deepening, Alpha–Beta, and Ordering

6.1 Core Loop

- Iterative deepening: search depth 1…N; at each iteration, remember principal variation (PV) and last best move.

- Negamax alpha–beta: single-PV first, then PV-split if you add SMP.

- Move ordering (crucial for pruning efficiency):

  - PV move from previous iteration

  - TT move on a hash hit

  - Captures scored by MVV-LVA or SEE

  - Killers (two per ply)

  - History heuristic (side×from×to counters)
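MVV-LVA (most valuable victim, least valuable attacker) can be expressed as a single integer key. The piece encoding below (PAWN = 0 through KING = 5) and the weighting of 8 per victim step are assumptions. Any scheme works as long as a more valuable victim always outranks a cheaper attacker.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical colour-independent piece encoding.
enum PieceType { PAWN, KNIGHT, BISHOP, ROOK, QUEEN, KING };

// Victim dominates (steps of 8); among equal victims, the cheaper attacker scores higher.
constexpr int mvvLva(PieceType victim, PieceType attacker) {
    return static_cast<int>(victim) * 8 - static_cast<int>(attacker);
}

struct Capture { PieceType victim, attacker; };

// Highest MVV-LVA score first; stable_sort keeps generation order among ties.
inline void sortCaptures(std::vector<Capture>& caps) {
    std::stable_sort(caps.begin(), caps.end(), [](const Capture& a, const Capture& b) {
        return mvvLva(a.victim, a.attacker) > mvvLva(b.victim, b.attacker);
    });
}
```

MVV-LVA ignores whether the captured piece is defended. That is why SEE is listed as the stronger alternative for capture ordering.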

6.2 Pruning & Reductions (later, but plan ahead)

- Null-move pruning, late move reductions (LMR), futility pruning, razoring, singular extensions (advanced).

- Transposition table: compact entries (key, depth, score, flag, move, static eval), typically grouped into buckets that fill one 64-byte cache line. Remember mate score conventions (e.g., ±MATE-in-n).

- Quiescence: captures and checks (start with captures only), with delta pruning and TT use.

6.3 Time Management

- From the go parameters, compute a budget. If wtime/btime are present, slice a fraction (e.g., 1/20 with soft and hard caps). If movetime is given, obey it strictly.

- Install stop flags checked in the node loop; respect stop/quit.

- On an “unstable” PV (big swings), spend a bit more time within caps.

7) The NNUE Evaluator: Theory to Practice

7.1 Why NNUE

Traditional handcrafted evaluations encode domain heuristics (material, mobility, king safety). NNUE learns these interactions from huge datasets yet remains CPU-friendly. The network is small and fully connected, and its large first layer is kept in an incrementally updatable accumulator keyed by sparse features such as HalfKP. The search consumes an NNUE evaluation exactly like any other scalar score. (Stockfish)

7.2 Feature Encoding: HalfKP

HalfKP encodes each non-king piece as a (king_square, piece_square, piece_type, piece_color) tuple. This produces a sparse binary feature vector in which only a small subset of inputs is active. The encoding is built twice, once from each side's perspective and relative to that side's own king. This king-relative anchoring improves generalisation and locality. (Chess Stack Exchange)

Practical notes:

- Maintain two accumulators (side to move and opponent) or a symmetric scheme, depending on the network definition you adopt.

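- Feature toggles on make/unmake: for each moved, captured, or promoted piece, remove and add the corresponding input contributions in the accumulator. Every HalfKP feature is indexed by its own king's square, so a king move (including castling) invalidates that side's accumulator, which must then be rebuilt from scratch.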
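To make the HalfKP indexing concrete, here is a hypothetical layout: 64 king squares × 10 piece kinds (five non-king types, ours or theirs) × 64 piece squares, with Black's view flipped vertically. It is an illustration only. Real .nnue formats fix their own ordering and orientation, including whether Black's perspective is mirrored or rotated. An engine must match the convention the network was trained with exactly.

```cpp
// Hypothetical HalfKP-style layout (not byte-compatible with any published .nnue file):
// 64 king squares x 10 piece kinds x 64 piece squares = 40960 inputs per perspective.
constexpr int NUM_SQ = 64;
constexpr int NUM_KINDS = 10;
constexpr int HALFKP_DIM = NUM_SQ * NUM_KINDS * NUM_SQ;

enum Color { WHITE = 0, BLACK = 1 };

// Black sees the board flipped vertically (a1 <-> a8), so both sides share one weight matrix.
constexpr int orient(Color persp, int sq) { return persp == WHITE ? sq : (sq ^ 56); }

// pieceType: 0..4 = pawn, knight, bishop, rook, queen (kings are not features in HalfKP).
constexpr int halfkpIndex(Color persp, int kingSq, int pieceType, Color pieceColor, int sq) {
    const int kind = pieceType * 2 + (pieceColor == persp ? 0 : 1);  // ours vs theirs
    return (orient(persp, kingSq) * NUM_KINDS + kind) * NUM_SQ + orient(persp, sq);
}
```

Under this layout, a position seen from Black produces the same indices as its colour-flipped mirror seen from White. This is what lets the two accumulators share weights.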

7.3 Network Architecture and Quantisation

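Typical chess NNUE uses 4 fully connected layers with Clipped ReLU activations. The original Stockfish HalfKP network, usually described as 256×2-32-32-1, is an example. Its feature transformer feeds two 256-wide accumulators, one per perspective, followed by 512→32, 32→32, and 32→1 affine layers. Inference runs in int8/int16 for throughput. Training runs in floating point, after which the weights are exported and quantised. The Stockfish docs emphasise integer-domain inference as the key to speed. (official-stockfish.github.io)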

Key components you’ll implement:

- Weights loader for a .nnue file format you document (or adopt a de facto standard).

- Affine + activation kernels using vector intrinsics (SSE2/SSE4.1/AVX2/AVX-512 if available), with scalar fallbacks.

- Accumulator state that stores the pre-activation sums of the first hidden layer, so that updates are O(changed_features) per move.
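The O(changed_features) claim is easiest to see with toy sizes. This sketch assumes a feature transformer with int16 weight columns (one per input feature) and a bias vector. A full refresh sums every active column. A move, by contrast, only subtracts the columns of features that disappear and adds those that appear. The dimensions and names are hypothetical.

```cpp
#include <array>
#include <cstdint>
#include <vector>

constexpr int N_INPUTS = 8;  // toy number of input features
constexpr int H = 4;         // toy first-layer width

struct FeatureTransformer {
    std::array<std::array<std::int16_t, H>, N_INPUTS> weight{};  // one column per feature
    std::array<std::int16_t, H> bias{};
};

using Acc = std::array<std::int16_t, H>;

// Full refresh: bias plus the column of every active feature. Cost O(active * H).
Acc refresh(const FeatureTransformer& ft, const std::vector<int>& active) {
    Acc acc = ft.bias;
    for (int f : active)
        for (int i = 0; i < H; ++i) acc[i] = static_cast<std::int16_t>(acc[i] + ft.weight[f][i]);
    return acc;
}

// Incremental update: touch only the changed features. Cost O(changed * H).
// Real networks keep weight ranges small enough that these int16 sums do not overflow.
void addFeature(Acc& acc, const FeatureTransformer& ft, int f) {
    for (int i = 0; i < H; ++i) acc[i] = static_cast<std::int16_t>(acc[i] + ft.weight[f][i]);
}

void removeFeature(Acc& acc, const FeatureTransformer& ft, int f) {
    for (int i = 0; i < H; ++i) acc[i] = static_cast<std::int16_t>(acc[i] - ft.weight[f][i]);
}
```

Comparing an incrementally updated accumulator with refresh() on the new feature set is the accumulator self-consistency check listed under testing.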

7.4 C++ Interface

```cpp
#include <array>
#include <cstdint>
#include <string>
#include <vector>

// Board and Move come from core/.
namespace nnue {

constexpr int MAX_H1 = 1024;  // assumed upper bound on the first hidden layer width

struct NetworkDesc {
    int input_dim, h1, h2, out;
    bool clipped_relu;
    // scale/zero-point for quantization, etc.
};

class Accumulator {
public:
    // first hidden layer sums for both perspectives (side to move, opponent)
    std::array<std::int16_t, MAX_H1> sum_us, sum_them;
    void reset(const Board& b);
    void update(const Board& before, const Move& m, const Board& after);
};

class Evaluator {
public:
    bool loadNetwork(const std::string& path);
    int eval(const Board& b, Accumulator& acc) const;  // returns centipawns

private:
    NetworkDesc desc;
    std::vector<std::int8_t> W1, W2, W3;   // packed weights
    std::vector<std::int32_t> B1, B2, B3;
};

} // namespace nnue
```

Workflow:

- On ucinewgame or after loading a new FEN, call acc.reset(board).

- On makeMove, call acc.update.

- At evaluation time, eval(board, acc) consumes the accumulator, applies the remaining layers, and returns a scaled centipawn score (be consistent with search score conventions).

7.5 Integer Arithmetic and Scaling

- Adopt a single global scale from int domain to centipawns (e.g., a fixed-point multiplier after the last layer).

- Clamp activations with Clipped ReLU to match the network’s training bounds.

- Ensure determinism across platforms: avoid UB, use fixed rounding, and unit-test kernels with golden outputs.
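Below is a scalar sketch of the integer kernels described above, assuming int8 activations, int8 weights, int32 accumulation, and power-of-two weight scaling. CLIP_MAX, WEIGHT_SHIFT and OUTPUT_DIV are placeholder constants. A real network defines its own, and they must match the values used when quantising it. Negative sums are clipped before the shift, so the result does not depend on how a platform shifts negative integers.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>

// Placeholder quantisation constants (assumptions, not from any particular network).
constexpr int CLIP_MAX = 127;    // clipped-ReLU upper bound in the int8 domain
constexpr int WEIGHT_SHIFT = 6;  // hidden-layer weights carry a 2^6 fixed-point factor
constexpr int OUTPUT_DIV = 16;   // final integer divisor to centipawns

inline std::int8_t clippedRelu(std::int32_t x) {
    return static_cast<std::int8_t>(std::clamp<std::int32_t>(x, 0, CLIP_MAX));
}

// One output neuron: int8 x int8 products summed in int32, plus an int32 bias.
inline std::int32_t dot(const std::int8_t* in, const std::int8_t* w, std::size_t n,
                        std::int32_t bias) {
    std::int32_t sum = bias;
    for (std::size_t i = 0; i < n; ++i) sum += std::int32_t{in[i]} * std::int32_t{w[i]};
    return sum;
}

// Remove the weight scale and clip. Negative sums go straight to 0, so no negative shift occurs.
inline std::int8_t hiddenActivation(std::int32_t sum) {
    return clippedRelu(sum < 0 ? 0 : (sum >> WEIGHT_SHIFT));
}

// Integer-only scaling to centipawns; integer division truncates toward zero on every platform.
inline int toCentipawns(std::int32_t raw) { return raw / OUTPUT_DIV; }
```

SIMD versions compute exactly the same values, so golden outputs from this scalar path make good unit-test oracles for the vectorised kernels.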

7.6 Fallback / Hybrid Evaluation

Early on, keep a minimal PSQT and material term as a backstop if the network fails to load. You can also linearly mix NNUE with handcrafted terms during development (e.g., 90% NNUE + 10% material in edge cases) and remove the mix once stable.

8) Wiring NNUE into Search

- At the start of each node, call eval(b, acc) to obtain the static evaluation (quiescence needs it for the stand-pat score).

- Use the TT to store the static eval; reuse it in null-move pruning and reductions.

- Ensure make/unmake integrates with the accumulator updates; never recompute from scratch inside the node unless debugging.
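One common way to keep make/unmake and the accumulator in sync is a per-ply stack. Making a move copies the parent accumulator and applies the feature deltas; unmaking just pops it, so no inverse arithmetic can drift. AccumulatorStack and FeatureDelta are hypothetical names. For a HalfKP king move, the caller would push a freshly rebuilt accumulator with pushFull instead of a delta.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr int H = 4;  // toy first-layer width
using Acc = std::array<std::int16_t, H>;

struct FeatureDelta {
    std::vector<int> removed, added;  // feature indices leaving / entering
};

class AccumulatorStack {
public:
    explicit AccumulatorStack(const Acc& root) { stack_.push_back(root); }

    // makeMove: copy the parent, then apply only the changed features.
    template <class Weights>  // Weights: callable (feature, unit) -> int16 weight
    void push(const FeatureDelta& d, const Weights& w) {
        Acc next = stack_.back();
        for (int f : d.removed)
            for (int i = 0; i < H; ++i) next[i] = static_cast<std::int16_t>(next[i] - w(f, i));
        for (int f : d.added)
            for (int i = 0; i < H; ++i) next[i] = static_cast<std::int16_t>(next[i] + w(f, i));
        stack_.push_back(next);
    }
    void pushFull(const Acc& fresh) { stack_.push_back(fresh); }  // e.g. after a king move
    void pop() { stack_.pop_back(); }                             // unmakeMove
    const Acc& top() const { return stack_.back(); }
    std::size_t depth() const { return stack_.size() - 1; }

private:
    std::vector<Acc> stack_;
};
```

The copy costs one accumulator per ply, which is small next to a search node. In exchange, unmake is trivially correct.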

9) Training and Dataset Generation (Practical Overview)

While you can ship an engine with an existing network, training your own NNUE is instructive:

- Generate positions and labels: self-play or large game databases; label targets as search evaluations at fixed depth (e.g., D=8/10) or as game outcomes with bootstrapping. Stockfish’s NNUE post describes training on “millions of positions” with moderate search depth outputs. (Stockfish)

- Feature extraction: write a generator that encodes HalfKP features per position; store as sparse indices and signs for efficient batching.

- Architecture: 4-layer MLP with clipped ReLU; train in PyTorch or similar; regularisation and label smoothing help.

- Quantisation: post-training quantisation to int8/int16; export weights and biases into your .nnue container with metadata (dims, scales, zero-points). Stockfish’s docs emphasise integer-domain inference for speed. (official-stockfish.github.io)

- Validation: monitor MSE on held-out positions and run quick gauntlet matches to estimate Elo. Background discussions on HalfKP and training volumes appear in community Q&A and forums. (Chess Stack Exchange)
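One widely used way to combine the two labelling options is to squash the search score into an expected result with a logistic curve, then interpolate it with the game outcome. The 400-centipawn scale and the lambda weighting below are assumptions to tune, not values from a specific trainer. The sketch is written in C++ for consistency with the rest of this article, although the same formula would normally live in the Python data pipeline.

```cpp
#include <cmath>

constexpr double CP_SCALE = 400.0;  // hypothetical: centipawns per logistic unit

// Map a centipawn score (side to move's view) to an expected result in (0, 1).
inline double cpToWinProb(double cp) { return 1.0 / (1.0 + std::exp(-cp / CP_SCALE)); }

// result: 1.0 win, 0.5 draw, 0.0 loss, from the side to move's point of view.
// lambda = 1.0 trains purely on search scores; lambda = 0.0 purely on game outcomes.
inline double trainingTarget(double cp, double result, double lambda) {
    return lambda * cpToWinProb(cp) + (1.0 - lambda) * result;
}
```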

For the historic and theoretical base of NNUE, consult Yu Nasu’s paper (English translation available). (Oscar’s blog)

10) Time Manager: From Toy to Practical

- If movetime is specified, use it as the strict hard cap.

- Otherwise, compute a per-move budget:

  - Soft = (remaining_time / moves_to_go_guess) × factor

  - Hard = Soft × 1.5 (never exceed).

- Spend slightly more on tactical instability (PV changes, fail-high/fail-low oscillations), but always honour hard caps.

- Use node counts and elapsed-time checks inside the search to react to stop quickly.
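The soft/hard scheme above can be turned into a budget calculation as sketched below. GoLimits is a hypothetical struct. The following values are assumptions modelled on this article's examples and should be tuned with self-play:

- the 30 ms communication overhead
- the 30-move default horizon
- the 0.9 factor
- the 3/4 share of the increment
- the 50% cap

```cpp
#include <algorithm>
#include <cstdint>
#include <limits>

// Hypothetical inputs, already resolved to the side to move. Milliseconds; -1 = not given.
struct GoLimits {
    std::int64_t movetime = -1, time = -1, inc = 0;
    int movestogo = 0;  // 0 = not given
};

struct Budget { std::int64_t soft, hard; };

Budget computeBudget(const GoLimits& g, std::int64_t overheadMs = 30) {
    if (g.movetime >= 0) {                        // fixed time per move: obey strictly
        const std::int64_t t = std::max<std::int64_t>(1, g.movetime - overheadMs);
        return {t, t};
    }
    if (g.time < 0) {                             // no clock (e.g. go depth / go infinite)
        const std::int64_t inf = std::numeric_limits<std::int64_t>::max();
        return {inf, inf};
    }
    const int mtg = g.movestogo > 0 ? g.movestogo : 30;
    const std::int64_t usable = std::max<std::int64_t>(1, g.time - overheadMs);
    std::int64_t soft = usable * 9 / (10 * mtg) + g.inc * 3 / 4;  // (time / mtg) * 0.9 + inc share
    std::int64_t hard = soft * 3 / 2;                              // soft * 1.5
    const std::int64_t cap = std::max<std::int64_t>(1, usable / 2);  // never plan > half the clock
    hard = std::clamp<std::int64_t>(hard, 1, cap);
    soft = std::clamp<std::int64_t>(soft, 1, hard);
    return {soft, hard};
}
```

A common split is to check the soft limit only between iterations, so that no new depth starts once it has passed. The hard limit is then checked inside the node loop every few thousand nodes.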

11) Transposition Table (TT) Implementation Notes

- Index TT with 64-bit Zobrist key; consider a power-of-two table size and store multiple entries per bucket (e.g., 4-way).

- Entry fields: key16, move16/32, depth8, flag2 (EXACT/LOWER/UPPER), score16 (packed), eval16, age.

- On store, prefer replacing shallow entries or older ages; on probe, adjust mate scores from search ply to root ply.
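The mate-score adjustment on store and probe is small but easy to get backwards. This sketch assumes mate scores are encoded as MATE - n for mate in n plies from the root; MATE = 32000 and MAX_PLY = 128 are placeholders. It also shows power-of-two bucket indexing from the low key bits, with the top 16 bits kept as the stored verification key.

```cpp
#include <cstdint>

constexpr int MATE = 32000;
constexpr int MAX_PLY = 128;
constexpr int MATE_BOUND = MATE - MAX_PLY;  // |score| >= MATE_BOUND means a mate score

// Store: convert "mate in n from the root" into "mate in k from this node" (k = n - ply),
// so the entry stays valid when the same position is reached at a different ply.
constexpr int scoreToTT(int score, int ply) {
    if (score >= MATE_BOUND) return score + ply;
    if (score <= -MATE_BOUND) return score - ply;
    return score;
}

// Probe: convert back to root-relative at the current ply.
constexpr int scoreFromTT(int score, int ply) {
    if (score >= MATE_BOUND) return score - ply;
    if (score <= -MATE_BOUND) return score + ply;
    return score;
}

// numBuckets must be a power of two; low bits pick the bucket, high bits verify the entry.
inline std::uint64_t bucketIndex(std::uint64_t key, std::uint64_t numBuckets) {
    return key & (numBuckets - 1);
}
inline std::uint16_t key16(std::uint64_t key) { return static_cast<std::uint16_t>(key >> 48); }
```

Taking the verification bits from the opposite end of the key from the index bits keeps the two independent, so a 16-bit match adds real discrimination.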

12) Quiescence Search and Evaluation Stability

- Start with captures-only quiescence; optionally add checks later.

- Use SEE or delta pruning to prune obviously losing captures.

- Keep evaluation consistent: NNUE at leaves, not mid-node to avoid double counting.

- Cache static evals in TT to reduce NNUE calls in quiescence.

13) Parallel Search (SMP): Optional First Steps

Parallel search introduces non-determinism and complexity (split points, work stealing). For an MVP:

- Start single-threaded.

- Add a simple lazy SMP (multiple root searches with shared TT) later, or adopt a split-point framework once your single-threaded search is stable.

14) Testing: Correctness, Performance, and Strength

- Correctness

  - Perft vs canonical values (depth 1…6).

  - FEN loader round-trips; hash keys stable under make/unmake sequences.

  - Accumulator self-consistency: reset(board) equals repeated update from the initial position.

- Performance

  - Bench mode: fixed FEN set, fixed depth; report nodes/s and wall time.

  - NNUE microbenchmarks: first layer kernel throughput; accumulator update cost per move.

  - Build matrix (SSE2 baseline, SSE4.1, AVX2, AVX-512).

- Playing Strength

  - Short self-play at various time controls; gauntlets vs reference engines; use Elo estimation tools (e.g., Ordo, BayesElo).

  - Ensure UCI compliance in logs; try mainstream GUIs (Fritz, Arena) that expect UCI behaviour. (ChessBase Support)

15) Security, Determinism, and Portability

- Avoid undefined behaviour (strict aliasing, uninitialised reads).

- Use fixed-width types (int8_t, int16_t, int32_t) in NNUE paths.

- Normalise endianness for weight loads.

- Provide reproducible builds: pin compiler versions and flags; include a --version/id line in the uci response.
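For the endianness point above, reading multi-byte fields one byte at a time makes weight loading independent of the host's byte order. This sketch assumes the (hypothetical) .nnue container stores everything little-endian. The helper names are illustrative.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assemble a little-endian uint32 from bytes; identical result on any host.
inline std::uint32_t readU32LE(const std::uint8_t* p) {
    return std::uint32_t{p[0]} | (std::uint32_t{p[1]} << 8) |
           (std::uint32_t{p[2]} << 16) | (std::uint32_t{p[3]} << 24);
}

// Two's-complement reinterpretation: well-defined since C++20, and what mainstream
// compilers already do in earlier standards.
inline std::int16_t readI16LE(const std::uint8_t* p) {
    const auto u = static_cast<std::uint16_t>(p[0] | (p[1] << 8));
    return static_cast<std::int16_t>(u);
}

// Decode `count` int16 weights starting at `offset`; returns an empty vector if the
// buffer is too short (a truncated or corrupt file).
inline std::vector<std::int16_t> readI16Array(const std::vector<std::uint8_t>& buf,
                                              std::size_t offset, std::size_t count) {
    if (offset + 2 * count > buf.size()) return {};
    std::vector<std::int16_t> out(count);
    for (std::size_t i = 0; i < count; ++i) out[i] = readI16LE(buf.data() + offset + 2 * i);
    return out;
}
```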

16) Extending the Engine (Roadmap)

- Search enhancements: PVS, LMR tuning, move count pruning, SEE-based ordering, TT refits.

- Evaluation: additional feature heads (tempo, phase), or multiple networks (opening vs endgame) blended by game phase.

- Tablebases: Syzygy WDL/DTZ integration (exact win/draw/loss results and DTZ-guided play in late endgames).

- Book learning: opening books and experience files (optional). Background on books in engines can contextualise later features. (Wikipedia)

17) Worked Code Sketches

17.1 UCI Command Handling

```cpp
void Engine::cmdUci() {
    std::cout << "id name MyNNUEEngine 0.1\n";
    std::cout << "id author Your Name\n";
    std::cout << "option name Hash type spin default 64 min 1 max 4096\n";
    std::cout << "option name Threads type spin default 1 min 1 max 256\n";
    std::cout << "option name UseNNUE type check default true\n";
    std::cout << "option name EvalFile type string default nn-000000.nnue\n";
    std::cout << "uciok\n" << std::flush;
}

void Engine::cmdIsReady() { std::cout << "readyok\n" << std::flush; }

void Engine::cmdPosition(const std::string& s) {
    parser_.setPosition(board_, s);  // handles startpos/FEN + moves
    nnueAcc_.reset(board_);          // rebuild the accumulator for the new root position
}

void Engine::cmdGo(const std::string& s) {
    Limits lim = parseGo(s);
    // Synchronous for brevity: a real engine hands this to a search thread so the
    // main loop can still read "stop" and "isready" while the search runs.
    SearchResult r = search_.iterate(board_, nnueAcc_, lim, options_);
    std::cout << "bestmove " << Uci::moveToStr(r.best) << "\n" << std::flush;
}
```

17.2 Search Outline

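```cpp
SearchResult Search::iterate(Board& b, nnue::Accumulator& acc,
                             const Limits& lim, const Options& opt) {
    timer_.start(lim);
    Move best = MOVE_NONE;  // a robust engine seeds this with any legal move

    for (int d = 1; d <= lim.maxDepth && !timer_.expired(); ++d) {
        Score alpha = -INF, beta = INF;  // full window; add aspiration windows later
        PV pv;
        Score sc = negamax(b, acc, d, alpha, beta, /*ply=*/0, pv);

        // timer_.expired() should also report an external "stop". An interrupted
        // iteration is discarded; the previous iteration's move remains the answer.
        if (timer_.expired()) break;
        if (pv.length > 0) best = pv.line[0];  // assumes PV records its length

        // Mate scores should be reported as "score mate N" rather than "score cp".
        std::cout << "info depth " << d
                  << " score cp " << sc.cp()
                  << " nodes " << nodes_
                  << " time " << timer_.elapsedMs()
                  << " pv " << pv.toString() << "\n" << std::flush;
    }
    return {best};
}
```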

17.3 NNUE Evaluation Stub (Integer Domain)

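```cpp
#include <algorithm>
#include <cstdint>

// Sketch: CLIP_MAX (<= 127), MAX_H2, OUTPUT_SCALE and the affine/clippedRelu/affine1 helpers
// are assumed to be defined elsewhere; reset() is assumed to seed the sums with the biases.
int nnue::Evaluator::eval(const Board& b, Accumulator& acc) const {
    // 1) acc contains first-layer pre-activations (int16) for both perspectives.
    // 2) Clip each perspective and concatenate them (side to move first). The two halves
    //    are separate inputs to the next layer, not values to subtract from each other.
    alignas(64) std::int8_t x[2 * MAX_H1];
    for (int i = 0; i < desc.h1; ++i) {
        x[i]           = static_cast<std::int8_t>(std::clamp<int>(acc.sum_us[i], 0, CLIP_MAX));
        x[desc.h1 + i] = static_cast<std::int8_t>(std::clamp<int>(acc.sum_them[i], 0, CLIP_MAX));
    }
    // 3) Fully connected (2*h1) -> h2 with int8 weights and int32 accumulators, then clip.
    alignas(64) std::int32_t h2[MAX_H2];
    alignas(64) std::int8_t a2[MAX_H2];
    affine(x, 2 * desc.h1, W2.data(), B2.data(), h2, desc.h2);  // pseudocode helper
    clippedRelu(h2, a2, desc.h2);                               // pseudocode helper
    // 4) Output layer, then integer scaling to centipawns (no floats in inference).
    const std::int32_t raw = affine1(a2, desc.h2, W3.data(), B3[0]);  // pseudocode helper
    return static_cast<int>(raw / OUTPUT_SCALE);
}
```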

(In production you will pack weights (e.g., row-major, 32-byte aligned), unroll loops, and use intrinsics for throughput.)

18) Documentation and SEO Considerations

To make this article SEO-friendly and developer-useful:

- Include concise definitions of UCI, NNUE, HalfKP, alpha–beta, and bitboards.

- Use descriptive headers and keyword variants naturally: “UCI chess engine in C++”, “NNUE accumulator”, “HalfKP features”, “incremental evaluation”, “alpha–beta search implementation”.

- Link (with citations) to authoritative sources: UCI summaries, NNUE introductions, and Stockfish docs. (chessprogramming.org)

- Provide code fragments that readers can paste and extend; keep them minimal and correct.

19) Frequently Encountered Pitfalls (and Remedies)

- Illegal move bugs revealed by perft mismatches → instrument pins/checks logic; unit-test castling and EP across checks.

- Accumulator desync after unusual moves → add assertions: reset(board) must equal a cumulative update replay; fuzz with random move sequences.

- TT corruption (weird PVs, oscillations) → verify key storage, depth bounds, and mate score normalisation.

- Time forfeits → prioritise responsiveness to stop; place checks in node hot paths; raise thread priority only with care.

- Platform divergence → deterministic integer kernels; avoid float in inference; lock down compiler flags.

20) Licensing and Distribution

- If you train on public data or derive from open engines, respect their licences (e.g., GPLv3 in the case of Stockfish).

- Distribute networks separately when required, and state their provenance.

- Provide a README with UCI options, command-line switches, and a bench/perft guide.

- For GUIs, advertise compatibility with mainstream UCI hosts (Fritz, Arena) that accept UCI engines. (ChessBase Support)

21) References (selected)

- UCI protocol primers and background: Chess Programming Wiki; Wikipedia. (chessprogramming.org)

- NNUE concept, history, and integration: Chess Programming Wiki (NNUE, Stockfish NNUE), Stockfish blog post introducing NNUE, Stockfish NNUE docs (quantisation/integer inference), Yu Nasu’s paper (English PDF/translation). (chessprogramming.org)

- HalfKP explanation and community notes: Chess Stack Exchange and TalkChess threads; university project notes. (Chess Stack Exchange)

22) A Step-by-Step Checklist You Can Follow

- Scaffold the repository (layout above) and add CMake with release flags.

- Implement bitboards + perft until results match canonical positions.

- Add Zobrist hashing, TT, and iterative deepening; print UCI info lines.

- Integrate NNUE:

  - Define a minimal .nnue container (header with dims/scales + packed weights).

  - Write loader, accumulator, and int-inference kernels; add unit tests comparing against a reference Python implementation.

  - Expose UseNNUE/EvalFile UCI options.

- Connect evaluation to search; ensure make/unmake updates the accumulator.

- Time manager and stop flags; test under movetime and wtime/btime.

- Run perft again (guard rails), then smoke-test against known engines.

- Optimise (SIMD, cache locality, unrolls), measure wall-clock gains; protect correctness with tests.

- Document: README, UCI options, a short “quick start,” and your licence.

- Publish a first binary and gather feedback.

23) Conclusion

A competitive UCI NNUE engine in C++ is a tractable project if you proceed methodically: first, a bullet-proof board core and UCI loop; second, a well-ordered alpha–beta search; third, a robust NNUE evaluator with correct accumulator updates in integer arithmetic. The protocol specification and community conventions make integration with GUIs straightforward, while modern NNUE techniques deliver strength without GPU dependencies. Start small, test obsessively (especially perft and accumulator consistency), and iterate: every correctness fix and micro-optimisation compounds, and each training cycle of your network will unlock further Elo.

For deeper reading and alignment with community standards, keep the canonical UCI and NNUE resources at hand as you implement and refine your engine. (chessprogramming.org)

Appendix A: Example uci Output (minimal)

```
id name MyNNUEEngine 0.1
id author Your Name
option name Hash type spin default 64 min 1 max 4096
option name Threads type spin default 1 min 1 max 256
option name UseNNUE type check default true
option name EvalFile type string default nn-000000.nnue
uciok
```

Appendix B: Example go Handling

- If movetime is present: set soft = hard = movetime.

- Else if wtime/btime are present:

  - guess mtg = 30 if movestogo is not provided; soft = (wtime / mtg) * 0.9 (or btime for Black); hard = soft * 1.5 with an absolute cap (e.g., 50% of remaining time).

- Honour winc/binc by adding a fraction to soft/hard.
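Before any of these rules apply, the go line must be tokenised. The sketch of parseGo below assumes a Limits struct with the fields shown. The maxDepth field matches the name used in the search outline of section 17.2; the other field names, the -1 sentinels and the 128-ply default are assumptions. Unrecognised tokens such as ponder or searchmoves are skipped here rather than handled.

```cpp
#include <cstdint>
#include <sstream>
#include <string>

// Hypothetical search limits; times in ms, -1 = not given.
struct Limits {
    std::int64_t wtime = -1, btime = -1, winc = 0, binc = 0, movetime = -1;
    int movestogo = 0;        // 0 = not given
    int maxDepth = 128;       // assumed default when "depth" is absent
    std::uint64_t nodes = 0;  // 0 = unlimited
    bool infinite = false;
};

Limits parseGo(const std::string& line) {
    std::istringstream in(line);
    std::string tok;
    Limits lim;
    in >> tok;  // "go"
    while (in >> tok) {
        if (tok == "wtime") in >> lim.wtime;
        else if (tok == "btime") in >> lim.btime;
        else if (tok == "winc") in >> lim.winc;
        else if (tok == "binc") in >> lim.binc;
        else if (tok == "movestogo") in >> lim.movestogo;
        else if (tok == "movetime") in >> lim.movetime;
        else if (tok == "depth") in >> lim.maxDepth;
        else if (tok == "nodes") in >> lim.nodes;
        else if (tok == "infinite") lim.infinite = true;
        // Other tokens (e.g. "ponder", "searchmoves" and its move list) are ignored.
    }
    return lim;
}
```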

Appendix C: NNUE File Header (example design)

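```cpp
#include <cstdint>

struct NnueHeader {
    char magic[8];                          // "NNUEFILE" (exactly 8 bytes, no terminating NUL)
    std::uint32_t version;                  // 1
    std::uint32_t input_dim, h1, h2, out;
    std::int32_t scale_out;                 // fixed-point scale to cp
    // possibly per-layer zero-points/scales if using asymmetric quant
    std::uint8_t reserved[32];
};
static_assert(sizeof(NnueHeader) == 64, "no padding expected on common ABIs");
// Even so, read and write the header field by field in a fixed (e.g. little-endian) byte
// order rather than copying the struct's raw bytes.
```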

(Document the format publicly so others can produce compatible networks.)

Key sources cited: UCI protocol references and summaries (Chess Programming Wiki; Wikipedia), foundational NNUE descriptions and Stockfish implementation notes (Chess Programming Wiki, Stockfish blog and docs), and HalfKP explanations. (chessprogramming.org)


Jorge Ruiz

Connoisseur of both chess and anthropology, a combination that reflects his deep intellectual curiosity and passion for understanding both the art of strategic chess books